Monday, August 29

Kirino is home, though not yet unboxed; Menma awaits under my desk at the office. I have 4x1TB drives for each (I bought those last year to upgrade Nagi, but still haven't got around to it), and I just need to find some more memory for them, since they only come with 1GB.

Meanwhile at my day job I have four 32-processor Opteron servers each with 256GB of RAM and 54TB of disk, with two more such servers and 60 SSDs on their way. So right now I have a 128-processor cluster with 1TB of RAM, and that's going to grow by another 50% in the next month. This will make my life a lot easier.

I'm just waiting for someone at work to object to my virtual server names - so far I've named them after characters from Winnie the Pooh, Ghostbusters, Life on Mars / Ashes to Ashes, and My Little Pony. But by the time we're done we'll have something like 200 virtual servers, so we have to get names from somewhere.

Posted by: Pixy Misa at 09:08 PM


Post contains 180 words, total size 1 kb.

Sunday, August 28

Config:

Xeon E3 1230

16GB ECC RAM

2 x 1TB SATA disks

2 x 100GB+ SSD

Price:

Acmemicro: $1693 (purchase price) plus colo fees

SoftLayer (list): $1209/month (with 6TB bandwidth)

SoftLayer (discounted): $659/month (with 6TB bandwidth)

I/O Flood: $250/month (with 10TB bandwidth)

I haven't included RAID controllers. (I/O Flood do software RAID free; SoftLayer don't offer software RAID at all, and I've had it up to HERE with hardware RAID.) The SoftLayer price includes enterprisey WD disks and Micron SSDs, where I/O Flood offer desktop WD disks and Intel SSDs; again, neither offers any other option. And I can get two and a half servers at I/O Flood for the price of one at SoftLayer, so unless the hardware is completely flaky, I'll be able to deliver as good or better uptime and much better performance.
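A quick check of the "two and a half servers" figure, using only the monthly prices listed above. This is a throwaway sketch; the dictionary keys are just labels, and Acmemicro is left out because its colo fees aren't given.

```python
# Monthly prices quoted above (US$). Acmemicro is omitted: purchase price
# plus unstated colo fees gives no monthly figure to compare against.
monthly = {
    "SoftLayer (list)": 1209,
    "SoftLayer (discounted)": 659,
    "I/O Flood": 250,
}

# How many I/O Flood servers one SoftLayer server's monthly fee would pay for.
io_flood_per_softlayer = {
    name: price / monthly["I/O Flood"]
    for name, price in monthly.items()
    if name.startswith("SoftLayer")
}

# Yearly cost of each option, for a rough sense of scale.
yearly = {name: price * 12 for name, price in monthly.items()}

for name, ratio in io_flood_per_softlayer.items():
    print(f"{name}: {ratio:.2f} I/O Flood servers per SoftLayer server")
```

At the discounted rate the ratio is 659 / 250, about 2.6; at list price it is closer to 4.8.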

I'm getting some quotes from other providers as well; my plan is to move back out to two (or more) servers, at two different providers in two different locations, so that no single event can take us off the air.

It's not that SoftLayer is a bad provider - though exactly why I still see power outages on a server with redundant power supplies and a UPS is an unanswered question - it's just that they no longer seem to be in our marketplace.

Update: I have a quote from Tailor Made Servers now; they charge one-off fees for the SSDs, which makes them more expensive to start with than I/O Flood, but they've been in the business for years and I've used them before. One server at I/O Flood and another at TMS could work well, but they're both in the Southern US (Phoenix and Dallas, respectively) so I'm still looking to see if I can find someone good in the North-East.

Update: Got a quote back from JaguarPC. They're a little more expensive than I/O Flood, but not much, and have five datacentres available (Atlanta, New Jersey, Dallas, Phoenix, and Los Angeles) so I can situate the servers appropriately.

Posted by: Pixy Misa at 11:48 PM


Post contains 339 words, total size 2 kb.

Thursday, August 11

Now is the Winter of our disk content.*

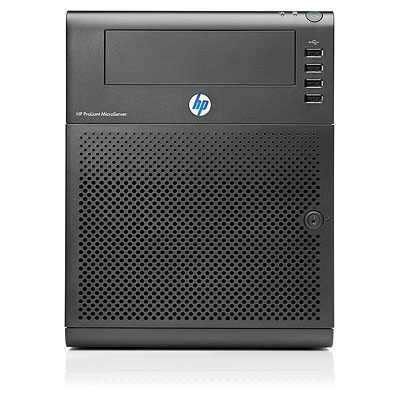

Just ordered a couple of HP servers. I've always wanted an HP server, and now I'm getting two.

Admittedly, they're very very small HP servers:

They take four 3.5" drives in neat little caddies, up to 8GB of ECC (or non-ECC) RAM, and are powered by an Athlon II dual-core chip.*** Gigabit ethernet, a swarm of USB ports, one each eSATA and VGA. Two low-profile PCIe slots, one full x16 and one x1. Comes with 1GB of ECC RAM and a 250GB disk, which isn't very much but at least lets you get it up and running right out of the box.

$250 each, including shipping - which is the same price as those Buffalo 4-disk NAS boxes, but a lot more capable. On the other hand, they're a good bit bigger than a Buffalo, but at the same time they're maybe one third the size of the mini-tower machines under my desk, and reportedly very quiet and light on power.

They'll work fine as file servers or test boxes without cluttering the place up too much.

I was uncertain whether to buy them - I could revive some of my older machines to do the same duties, though they'd be larger, noisier, and more expensive to run - when I found some pretty positive reviews and a 175-page thread and another 194-page thread about them on OCAU. They're apparently quite the item with the hobbyist crowd.

Now I just need to name them. Kirino for one, I think. And... Menma?

* Sorry.**

** Not really.

*** A 1.3GHz low-power Athlon, but that should be fine for what I'll be doing with them.

Posted by: Pixy Misa at 08:09 AM


Post contains 288 words, total size 2 kb.

Tuesday, August 09

This is Reia. It's essentially Ruby implemented on the Erlang VM, which is great if you're interested in Erlang but hate the syntax.*

Sadly the project has just been abandoned because there are apparently more things not to like about Erlang than its syntax.**

I've known of Reia (and Erlang, of course) for some time, but I wasn't really interested in them except as curiosities until I started working with RabbitMQ, CouchDB, Disco, and Riak, all of which are implemented (either partly or in full) in Erlang.

Reia - as Ruby in Erlang - seemed like the perfect next step in my Erlang explorations*** but alas, they paved it and put up a parking lot.

* In other words, you're sane.

** There's another, similar project called Elixir, but Reia has a better name and a nicer syntax.

*** Well, Python in Erlang would have been even better, but Ruby is a Python martini - six parts Python to one part Perl - and that's not a disagreeable mix.

Posted by: Pixy Misa at 11:48 PM


Post contains 175 words, total size 2 kb.

Monday, August 08

By which I don't mean, since Redis is cool, CouchDB is uncool. More like is CouchDB Yuri to Redis's Kei? Uh, do they complement each other nicely?

Because it sure looks that way to me.

Inspired by this very handy comparison of some of the top NoSQL databases, I've compiled a simpler item-by-item comparison of CouchDB and Redis, and it appears that CouchDB is strong precisely where Redis is weak (storing large amounts of rarely-changing but heavily indexed data), and Redis is strong precisely where CouchDB is weak (storing moderate amounts of fast-changing data).

That is, CouchDB seems to make a great document store (blog posts and comments, templates, attachments), where Redis makes a great live/structured data store (recent comment lists, site stats, spam filter data, sessions, page element cache).

Redis keeps all data in memory so that you can quickly update complex data structures like sorted sets or individual hash elements, and logs updates to disk sequentially for robust, low-overhead persistence (as long as you don't need to restart often).
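To make that persistence model concrete, here is a toy sketch of the idea, together with the periodic snapshot that lets the old log be dropped. It is not how Redis is implemented (real Redis forks a child process to write its snapshot, and has its own file formats); the class name LoggedStore and the JSON file layout are made up for illustration.

```python
import json
import os
import tempfile


class LoggedStore:
    """Toy model of the Redis persistence idea (not Redis itself): data lives
    in an in-memory dict, every write is appended to a log file, and a
    snapshot of the dict lets the old log be thrown away."""

    def __init__(self, directory):
        self.snapshot_path = os.path.join(directory, "snapshot.json")
        self.log_path = os.path.join(directory, "append.log")
        self.data = {}
        self._recover()
        self.log = open(self.log_path, "a", encoding="utf-8")

    def _recover(self):
        # On restart: load the last snapshot, then replay every write logged
        # since. A long log means a slow restart.
        if os.path.exists(self.snapshot_path):
            with open(self.snapshot_path, encoding="utf-8") as f:
                self.data = json.load(f)
        if os.path.exists(self.log_path):
            with open(self.log_path, encoding="utf-8") as f:
                for line in f:
                    self._apply(json.loads(line))

    def _apply(self, op):
        kind, key, value = op
        if kind == "set":
            self.data[key] = value
        elif kind == "del":
            self.data.pop(key, None)

    def _write(self, op):
        # Sequential append to disk, then update memory; nothing is
        # rewritten in place on disk.
        self.log.write(json.dumps(op) + "\n")
        self.log.flush()
        self._apply(op)

    def set(self, key, value):
        self._write(["set", key, value])

    def delete(self, key):
        self._write(["del", key, None])

    def snapshot(self):
        # Dump the whole dataset straight from memory, swap it in
        # atomically, then start a fresh, empty log.
        tmp = self.snapshot_path + ".tmp"
        with open(tmp, "w", encoding="utf-8") as f:
            json.dump(self.data, f)
        os.replace(tmp, self.snapshot_path)
        self.log.close()
        self.log = open(self.log_path, "w", encoding="utf-8")

    def close(self):
        self.log.close()


# Usage: write, restart, snapshot, restart again.
workdir = tempfile.mkdtemp()
store = LoggedStore(workdir)
store.set("hits", 1)
store.set("hits", 2)
store.set("user", "pixy")
store.close()

store = LoggedStore(workdir)  # recovers by replaying three log entries
after_replay = dict(store.data)
store.snapshot()
log_size_after_snapshot = os.path.getsize(store.log_path)
store.set("hits", 3)
store.close()

store = LoggedStore(workdir)  # recovers from snapshot plus one log entry
after_snapshot = dict(store.data)
store.close()
```

The snapshot never has to read anything back from disk, because the full dataset is already in memory.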

CouchDB uses an append-only single-file (per database) model - including both B-tree and R-tree indexes - so again, it offers very robust persistence, but will grow rapidly if you update your documents frequently.

With Redis, since the data is all in memory, you can run a snapshot at regular intervals and drop the old log files. With CouchDB you need to run a compaction process, which reads data back from disk and rewrites it, a slower process.
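The CouchDB side can be sketched the same way: a toy append-only file where every update writes a whole new version, so the file grows with each change until a compaction pass reads the live versions back and rewrites them. This is only an illustration of the principle; CouchDB's real file format appends B-tree nodes as well as documents, whereas this sketch (class name AppendOnlyFile, one JSON record per line) keeps its index in memory.

```python
import json
import os
import tempfile


class AppendOnlyFile:
    """Toy model of an append-only database file (not CouchDB's real format):
    every update appends a new version of the document, and an in-memory
    index points at the latest version of each key."""

    def __init__(self, path):
        self.path = path
        self.index = {}  # key -> byte offset of the newest version
        open(path, "ab").close()  # create the file if it doesn't exist
        with open(path, "rb") as f:
            offset = 0
            for line in f:
                self.index[json.loads(line)["key"]] = offset
                offset += len(line)

    @staticmethod
    def _encode(key, doc):
        # json.dumps escapes newlines, so each record is exactly one line.
        return (json.dumps({"key": key, "doc": doc}) + "\n").encode("utf-8")

    def put(self, key, doc):
        offset = os.path.getsize(self.path)
        with open(self.path, "ab") as f:
            f.write(self._encode(key, doc))  # old versions stay in the file
        self.index[key] = offset

    def get(self, key):
        with open(self.path, "rb") as f:
            f.seek(self.index[key])
            return json.loads(f.readline())["doc"]

    def compact(self):
        # Read the live version of every document back off disk, write them
        # to a new file, then swap it in.
        tmp = self.path + ".compact"
        new_index = {}
        with open(tmp, "wb") as out:
            for key in self.index:
                new_index[key] = out.tell()
                out.write(self._encode(key, self.get(key)))
        os.replace(tmp, self.path)
        self.index = new_index


# Usage: update one document 100 times, then compact.
path = os.path.join(tempfile.mkdtemp(), "blog.couch")
db = AppendOnlyFile(path)
for i in range(100):
    db.put("post-1", {"views": i})
size_before = os.path.getsize(path)
db.compact()
size_after = os.path.getsize(path)
reopened = AppendOnlyFile(path).get("post-1")
```

Because nothing is ever overwritten in place, a copy of the file taken at any moment is a consistent prefix, which is why "just copy the files" works as a backup.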

Redis provides simple indexes and complex structures; CouchDB provides complex indexes and simple structures. Redis is all about live data, while CouchDB is all about storing and retrieving large numbers of documents.

Now, MongoDB offers both a document store and high-performance update-in-place, but its persistence model is fling it at the wall and hope that it sticks, with a recovery log tacked on since 1.7. It's not intrinsically robust, you can't perform backups easily, and its write patterns aren't consumer-SSD-friendly. I do not trust MongoDB with my data.

One of the most unhappy elements of Minx is its interface with MySQL - writing the complex documents Minx generates back to SQL tables is painful. I've tried a couple of different ORMs, and they've proven so slow that they're completely impractical for production use (for me, anyway).

MongoDB offered me most of the features I needed with the API I was looking for, but it crashed unrecoverably early in testing and permanently soured me on its persistence model.

CouchDB is proving to be great for the document side of things, but less great for the non-document side. But I was looking at deploying Redis as a structured data cache, and it makes an even better partner with CouchDB than it does with MySQL.

It's really looking like I've got a winning team here.

Anyway, here's the feature matrix I mentioned:

| | CouchDB | Redis |
|---|---|---|
| Written in | Erlang | C |
| License | Apache | BSD |
| Release | 1.1.0, 2.0 preview | 2.2.12, 2.4.0RC5 |
| API | REST | Telnet-style |
| API Speed | Slow | Fast |
| Data | JSON documents, binary attachments | Text, binary, hash, list, set, sorted set |
| Indexes | B-tree, R-tree, Full-text (with Lucene), any combination of data types via map/reduce | Hash only |
| Queries | Predefined view/list/show model, ad-hoc queries require table scans | Individual keys |
| Storage | Append-only on disk | In-memory, append-only log |
| Updates | MVCC | In-place |
| Transactions | Yes, all-or-nothing batches | Yes, with conditional commands |
| Compaction | File rewrite | Snapshot |
| Threading | Many threads | Single-threaded, forks for snapshots |
| Multi-Core | Yes | No |
| Memory | Tiny | Large (all data) |
| SSD-Friendly | Yes | Yes |
| Robust | Yes | Yes |
| Backup | Just copy the files | Just copy the files |
| Replication | Master-master, automatic | Master-slave, automatic |
| Scaling | Clustering (BigCouch) | Clustering (Redis cluster*) |
| Scripting | JavaScript, Erlang, others via plugin | Lua* |
| Files | One per database | One per database |
| Virtual Files | Attachments | No |
| Other | Changes feed, Standalone applications | Pub/Sub, Key expiry |

* Coming in the near future.

Posted by: Pixy Misa at 11:27 AM


Post contains 639 words, total size 7 kb.

Sunday, August 07

So I did a bunch of reading on CouchDB, and it seems pretty interesting. It's flawed, but it's not flawed in the direction of oops, I just lost all your data (I'm looking at you, MongoDB), and it's not like I've found a database without flaws, so it's worth a shot.

Only none of the available Python libraries supports all the functionality of CouchDB, and one of the main ones is just abysmally slow. But on the other hand, one of the main weaknesses of CouchDB - it only has a REST API - means that it's easy to write a library - after all, it only has a REST API.

So I'm doing exactly that. The goal is to have a simple but complete library with the best performance I can manage. It will cover all the REST API calls but not try to do anything beyond that. I've isolated out the actual call mechanism so that it can be trivially swapped out if someone comes up with something more efficient. (Or if Couchbase creates a binary API.)

Soon as I have it mostly working I'll open source it and put it up on Bitbucket or something.

As it stands, you can connect to CouchDB, authenticate, create and delete databases, and read and write records, as well as various housekeeping thingies that were easy to implement. It supports convenient dict-style access, db[key], as well as db.get() and db.set().

It's called Settee, unless I think of something better. It's a sort of spartan couch.
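Settee's actual code isn't shown in this post, so the following is only a hypothetical sketch of the design described: dict-style access plus get() and set(), with the HTTP call isolated behind a swappable transport. The names Database, HttpTransport, and MemoryTransport are made up for illustration. The REST calls are CouchDB's standard GET and PUT on /db/docid, and authentication is left out. MemoryTransport fakes just enough of a server (including the 409 conflict on a missing or stale _rev) for the sketch to run without CouchDB.

```python
import http.client
import json
from urllib.parse import quote


class HttpTransport:
    """One possible call mechanism: a persistent keep-alive connection."""

    def __init__(self, host="localhost", port=5984):
        self.conn = http.client.HTTPConnection(host, port)

    def __call__(self, method, path, body=None):
        payload = json.dumps(body) if body is not None else None
        self.conn.request(method, path, body=payload,
                          headers={"Content-Type": "application/json"})
        resp = self.conn.getresponse()
        data = resp.read()  # read fully so the connection can be reused
        return resp.status, (json.loads(data) if data else None)


class MemoryTransport:
    """Stand-in for a CouchDB server: just enough GET/PUT to test against."""

    def __init__(self):
        self.docs = {}

    def __call__(self, method, path, body=None):
        current = self.docs.get(path)
        if method == "GET":
            if current is None:
                return 404, {"error": "not_found"}
            return 200, dict(current)
        if method == "PUT":
            # Like CouchDB, reject an update that lacks the current _rev.
            if current is not None and body.get("_rev") != current["_rev"]:
                return 409, {"error": "conflict"}
            n = int(current["_rev"].split("-")[0]) + 1 if current is not None else 1
            rev = "%d-fake" % n
            self.docs[path] = dict(body, _rev=rev)
            return 201, {"ok": True, "id": path.rsplit("/", 1)[1], "rev": rev}
        return 405, {"error": "method_not_allowed"}


class Database:
    """Dict-style access to one database; all I/O goes through `call`."""

    def __init__(self, name, call):
        self.name = name
        self.call = call  # any callable(method, path, body) -> (status, json)

    def _path(self, key):
        return "/%s/%s" % (quote(self.name, safe=""), quote(key, safe=""))

    def get(self, key, default=None):
        status, doc = self.call("GET", self._path(key))
        if status == 404:
            return default
        if status != 200:
            raise RuntimeError("GET %s failed: %s %r" % (key, status, doc))
        return doc

    def set(self, key, doc):
        status, result = self.call("PUT", self._path(key), doc)
        if status not in (201, 202):
            raise RuntimeError("PUT %s failed: %s %r" % (key, status, result))
        doc["_rev"] = result["rev"]  # lets the same dict be saved again
        return result["rev"]

    def __getitem__(self, key):
        doc = self.get(key)
        if doc is None:
            raise KeyError(key)
        return doc

    def __setitem__(self, key, doc):
        self.set(key, doc)


# Usage against the in-memory stand-in; pass HttpTransport() for a real server.
db = Database("blog", MemoryTransport())
db["post-1"] = {"title": "Settee"}
post = db["post-1"]
post["title"] = "Settee, a spartan couch"
db.set("post-1", post)
```

Keeping the transport a plain callable is what makes it trivial to swap in a different HTTP library later without touching the Database class.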

And in a simple test of 1000 separate record inserts:

Settee: 3.668s

CouchDB-Python: 41.53s

Either one would be much faster using the batch update functions, but there's no reason to grind to a complete halt when you need to do many small queries.

(Scrabbling sounds ensue for a couple more hours.)

Okay.

CouchDB-Python is slow specifically for record creates and updates; it seems to be fine otherwise. There's a fork called CouchDB-Python-Curl that is much faster on writes - slightly faster than my code, too - and uses significantly less CPU time, apparently because it uses libcurl, which is written in C. Only problem there is that libcurl is horrible.

I've tweaked my code now so that it supports both the stylish and elegant Requests module and the simple and functional Httplib2, both of which are miles ahead of Python's core library in functionality and PyCurl in friendliness. PyCurl is still the fastest, though:

| | Write/s | Read/s | User (s) | Sys (s) | Real (s) |
|---|---|---|---|---|---|
| CouchDB-Python | 24 | 603 | 1.248 | 1.108 | 85.7 |
| CouchDB-Psyco | 24 | 654 | 1.112 | 0.848 | 85.3 |
| CouchDB-Curl | 422 | 665 | 0.536 | 0.368 | 7.93 |
| CouchDB-Curl-Psyco | 421 | 711 | 0.576 | 0.452 | 7.93 |
| Settee-Requests | 272 | 418 | 2.704 | 1.564 | 12.4 |
| Settee-Requests-Psyco | 305 | 453 | 1.96 | 1.47 | 11.2 |
| Settee-Httplib2 | 376 | 636 | 0.98 | 0.784 | 8.48 |
| Settee-H2-Psyco | 392 | 687 | 0.6 | 0.77 | 8.48 |

CouchDB-Curl runs only slightly faster than Settee-H2, but uses about half the CPU time, which is a nice advantage. Running with the Psyco JIT compiler makes up much of the difference - speeding up Settee while increasing the system time on CouchDB-Curl. Can't do anything for poor CouchDB-Python, though. I think it's been Nagled, though I don't know why it only affects that part of the test.
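The "about half the CPU time" comparison can be checked by adding the user and sys columns of the benchmark table, treating them as CPU seconds:

```python
# User and sys CPU seconds, copied from the benchmark table above.
cpu = {
    "CouchDB-Curl": (0.536, 0.368),
    "CouchDB-Curl-Psyco": (0.576, 0.452),
    "Settee-Httplib2": (0.98, 0.784),
    "Settee-H2-Psyco": (0.6, 0.77),
}
total = {name: user + system for name, (user, system) in cpu.items()}

# CouchDB-Curl's CPU time as a fraction of Settee's, without and with Psyco.
plain_ratio = total["CouchDB-Curl"] / total["Settee-Httplib2"]
psyco_ratio = total["CouchDB-Curl-Psyco"] / total["Settee-H2-Psyco"]
print(f"without Psyco: {plain_ratio:.2f}, with Psyco: {psyco_ratio:.2f}")
```

That works out to roughly 0.51 without Psyco and roughly 0.75 with it, which is the gap Psyco closes.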

Update: Bulk insert of records, 100 at a time, single-threaded:

Settee-Rq: ~7000 records/s

Settee-H2: ~7700 records/s

That's healthy enough. Still trying to get the bulk read working the way I want it, though.
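For reference, batched writes in CouchDB go through the _bulk_docs endpoint: a POST to /{db}/_bulk_docs with a JSON body of the form {"docs": [...]}. Below is a minimal, hypothetical sketch of splitting a stream of records into batches of 100 and building those request bodies. The helper names and record fields are illustrative, and the actual sending is left to whichever HTTP library is in use.

```python
import json
from itertools import islice


def batches(records, size=100):
    """Yield successive lists of up to `size` items from any iterable."""
    it = iter(records)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def bulk_docs_body(docs):
    """JSON body for POST /{db}/_bulk_docs (sent as application/json)."""
    return json.dumps({"docs": docs})


# Hypothetical records with zero-padded ids, so they load in key order.
records = ({"_id": "rec-%06d" % i, "n": i} for i in range(1000))
bodies = [bulk_docs_body(chunk) for chunk in batches(records, 100)]
# Each body would then be POSTed in turn, e.g. with http.client:
# conn.request("POST", "/mydb/_bulk_docs", body=body,
#              headers={"Content-Type": "application/json"})
```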

Update: Okay, so there's a fair bit of overhead in the API - write queries seem to take a minimum of 2.5ms and read queries at least 1.5ms. (MySQL queries get down to about 0.5ms, and Redis is even faster.)
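Those minimum latencies cap what a single-threaded client issuing one request at a time can achieve, which lines up with the Settee-Httplib2 row in the benchmark table (376 writes/s, 636 reads/s):

$$
\frac{1}{2.5\ \text{ms}} = 400\ \text{writes/s}, \qquad \frac{1}{1.5\ \text{ms}} \approx 667\ \text{reads/s}
$$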

But the underlying data engine suffers from no such limitations: I'm firing 20 million small (~250 byte) JSON objects at it, single-threaded, batches of 100, and it's happily loading them at about 6,000 records per second, 5 million records in. That's with CouchDB at about 60% of a CPU, Python at about 6%, and disk (6-disk software RAID-5) at about 10% busy.
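At that rate the full load takes a little under an hour, and the raw JSON throughput is modest (using the ~250 bytes per record stated above):

$$
\frac{20{,}000{,}000\ \text{records}}{6{,}000\ \text{records/s}} \approx 3{,}333\ \text{s} \approx 56\ \text{min}, \qquad 6{,}000\ \text{records/s} \times 250\ \text{B} = 1.5\ \text{MB/s}
$$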

That's a pretty good showing, though I'm not stressing it at all yet - the data is being loaded in key order. We'll have to see how it copes with multiple compound indexes next.

Posted by: Pixy Misa at 05:52 PM


Post contains 698 words, total size 7 kb.

Thursday, August 04

RabbitMQ gets the exceedingly rare Doesn't Suck award for not only doing what it says it does, but allowing you to look at it and see that yes, it is indeed doing what it says it does.

After a couple of years of ActiveMQ, this is very refreshing.

If you have a couple or twelve Python applications and you need to ship messages between them cleanly and reliably, be it a few thousand a day or (like me) half a billion, RabbitMQ and the Kombu library are the way to go.

Posted by: Pixy Misa at 08:26 PM


Post contains 92 words, total size 1 kb.

Doh.

When I last looked at CouchDB, I somehow completely missed the point of views. They're not a query mechanism, they're an index definition mechanism.*

If they were a query mechanism, they'd suck. As an index definition mechanism, though, they do exactly - exactly - what I've been looking for: They look into the record structure and generate appropriate index entries based on any criteria I can think of. Thus, my need for a compound index on an array and a timestamp is trivially easy to implement.
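As a sketch of such a compound index: a view map function written in Python, in the def fun(doc): ... yield key, value style that couchdb-python's view server accepts. The tags and timestamp field names are hypothetical, and plain Python sorting stands in for CouchDB's key collation here (the two agree for simple lowercase ASCII strings and numbers, not in general).

```python
import bisect


def fun(doc):
    # Emit one index row per tag, keyed on [tag, timestamp], so that all
    # rows for a given tag sort together, in time order.
    for tag in doc.get("tags", []):
        yield [tag, doc["timestamp"]], None


# Simulate building the view index for a few hypothetical documents.
docs = [
    {"_id": "a", "tags": ["redis", "couchdb"], "timestamp": 1312700000},
    {"_id": "b", "tags": ["couchdb"], "timestamp": 1312800000},
    {"_id": "c", "tags": ["erlang"], "timestamp": 1312600000},
]
index = sorted((key, doc["_id"]) for doc in docs for key, _ in fun(doc))

# The equivalent of a range query from ["couchdb", 0] to ["couchdb", {}]:
# CouchDB uses {} as the high end; float("inf") stands in for it here.
keys = [key for key, _ in index]
start = bisect.bisect_left(keys, ["couchdb", 0])
end = bisect.bisect_left(keys, ["couchdb", float("inf")])
couchdb_posts = [doc_id for _, doc_id in index[start:end]]
```

The view only defines how rows are emitted and sorted; the query itself is just a contiguous slice of that sorted index.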

Just how good CouchDB is beyond that feature I don't know yet, but I'm sure going to find out.

Couple of other points: The last time I was looking I was dubious about using Erlang-based software; my experience with RabbitMQ since then has improved my opinion of Erlang considerably. And CouchDB uses a consumer-SSD-friendly append-only B-Tree structure for its indexes, making it cheap to deploy a very high-performance system (for small-to-middling databases, i.e. in the tens to hundreds of gigabytes). And, because it's append-only, you can safely back it up just by copying the files. I do like that.

Oh, and this: The couchdb-python package comes with a view server to allow you to write views in Python instead of JavaScript. That's kind of significant.

* Well, they're more than that, but that's the key distinction. Views don't query the data; they define a way to query the data - including the necessary index.

Posted by: Pixy Misa at 05:20 AM


Post contains 243 words, total size 2 kb.


Powered by Minx 1.1.6c-pink.