Saturday, January 22

Just hid my earlier post because I found a bug in one of my benchmarks, and went on a bugsplatting and optimising spree.

I'm back to working on Minx 1.2. There are two main things that I've wanted to fix for ages.

First, the common database queries for blog index pages, which often require complex joins and sorts - very well optimised joins and sorts, hence the speed, but common queries should be in index order wherever possible and not sort at all.

Second, the tag engine, which needs to populate the tag table with vastly more data than you will ever use - approximately 300 tags for each comment, for example, of which the typical comment template uses 6.

The first problem was what's had me looking into every kind of database under the Sun.* Since all databases suck, and since it turns out that Python is too slow to take over the job** I'm back to MySQL and its relatives for the main datastore - with the likelihood of offloading search to Xapian and using Redis as a sort of structured cache.

Speaking of structured caches, that's the fix for the query problem. It's called the Stack Engine, and basically it prebuilds and maintains all the standard queries for all your folders and threads and stores them so that you can page through them without ever having to do an index scan, much less a sort. The initial version uses MySQL to store the stacks, but it can just as easily (and more efficiently) be handled by Redis.

This will add a few milliseconds when you post a comment, but significantly reduce the query time for displaying a page.

The other major change is in the Template Engine. Minx has a lot of tags - a lot of a lot of tags; as I mentioned, there are currently about 300 tags just for a comment, and that's set to double in the new relase.

However, I've finally had the breakthrough I needed and found an elegant way to make most of those tags vanish. In 1.1.1 I set many of the sub-tags to lazily evaluate - there's just a placeholder in the tag table until you actually use that tag. If you never use it, the function never gets called.

The new engine takes this a two three steps further. First, for the most part it automatically maps the data from database into the tag table without me having to write hundreds of lines of fiddly code - or even set up lists of fields names. And it automatically copes with schema changes too, where the old version needed code changes and schema changes to be carefully synchronised.

Second, it completely virtualises about 80% of the existing tags. There's not even a placeholder anymore; the tag engine looks, finds that the tag you are using doesn't exist but that it can be calculated from a value that does, calls the appropriate and pops the result into your page. This cuts down the size of the tag table by 80% - and cuts down the time spent building it by 80% as well.

The third part is the code I've been hacking on most of the night, the new data mapper. It can now pull more than eight million*** fields a second out of the database and into the tag table ready for use - effectively forty million, since 80% of the tags are now virtual. The tag table itself is also significantly improved, so that adding and removing records (as your template runs through a list of posts or comments, for example) is - um, I'll have to go back and benchmark that part, but a hell of a lot faster.

So (a) these two modules make things run a whole lot faster, especially for big sites like Ace's, which I want to bring across to Minx ASAP, and (b) they make the code much cleaner, wiping out two existing modules full of boilerplate and making the rest of the code much easier to maintain.

So. Good. Now I just need to change all the other code to use the new modules....

* Well, that and my day job, where I need to store and index a hundred million posts a day.

** Which will be the subject of another post, you can bet.

*** Latest benchmark run is set at 4,458,841 fields per second in pure Python, 8,515,711 with Psyco.

Posted by: Pixy Misa at

11:46 AM

| No Comments

| Add Comment

| Trackbacks (Suck)

Post contains 746 words, total size 5 kb.

Friday, January 21

I've been reading up on SQLAlchemy since my earlier adventures. The developer's blog post on profiling convinced me that he knew what he was doing, and yet my benchmarking demonstrated that SQLAlchemy was unacceptably slow.

Clearly I was missing something.

What I was missing was what SQLAlchemy does at the high level - missing it because I wasn't looking at that level at all. But the way my code was written, based on the respective tuorials from SQLAlchemy and Elixir, it was using the high level functions.

Which automatically map your objects to the database and keep them in sync.

So, basically, you can define a bunch of classes, define how they map onto the database tables, and just mess about with the objects and leave SQLAlchemy to persistificate them for you to any arbitrary SQL database.

Which is of course horribly complicated, and, in an interpreted language such as Python, kind of slow.

And not what I want to do right now. Though it might come in handy at some point.

But - SQLAlchemy is split into two modules: The ORM (object-relational mapper), the high-end slow part, and the Core, a data abstraction layer, that just lets you do queries against different SQL databases without worrying about the fact that PostgreSQL and SQLite support INTERSECT but MySQL and Ingres don't. Or do inserts of complex records without having to painstakingly count the %s in the query string and match them up against your parameters.

Which is exactly what I want.

And if I want to use the ORM in some self-contained modules where performance isn't critical, I can do that too.

So I'm off to run my benchmarks again and see what shakes out.

Update: (a) Still slow. (b) Kind of horrible.

Posted by: Pixy Misa at

12:43 AM

| No Comments

| Add Comment

| Trackbacks (Suck)

Post contains 300 words, total size 2 kb.

Thursday, January 20

Target database acquired. Analysing.

Open source... Confirmed, GPLv2.

Supports UNION, INTERSECT, EXCEPT... Confirmed.

Supports full-text search... Confirmed.

Supports XML... Confirmed, SQL extensions, XQuery, XPath.

Supports arrays... Negative Maybe?

Supports user-defined types... Confirmed.

Supports geospatial data... Confirmed-ish.*

Supports graph structures... Confirmed, RDF, SPARQL.

Supports Python... Confirmed, both as client and scripting engine.

Supports HTTP, SMTP, POP, IMAP, NNTP... Confirmed. Wait, what? I thought this was a database.

Openlink Virtuoso could bear further investigation. The Openlink Blog looks satisfyingly full of technical stuffs.

I've also been taking a look at Cubrid, another interesting where-did-that-come-from** open source database.

* You can find a handy feature matrix here. The open source version has all the features of the commercial release, except for clustering, replication, and geospatial data. The lack of replication in the open source version is unfortunate, but in my projects I tend to go for application-level replication anyway.

Funnily enough, I was just looking at the free (as in beer) version of Informix, and that does feature replication and clustering - only two nodes, but what do you want for free?

** Korea, as it turns out.

Posted by: Pixy Misa at

02:12 AM

| Comments (6)

| Add Comment

| Trackbacks (Suck)

Post contains 187 words, total size 2 kb.

Tuesday, January 18

When you set the configuration file or command line parameters of a C program to tell it that it can use X amount of memory, it will use X amount of memory, and no more.

When you set the configuration file or command line parameters of a Java program to tell it that it can use X amount of memory, it will do whatever the hell it wants.

This is not intrinsic in the respective languages; rather, it seems to be an emergent property.

Posted by: Pixy Misa at

11:18 PM

| Comments (4)

| Add Comment

| Trackbacks (Suck)

Post contains 90 words, total size 1 kb.

Sunday, January 16

Kyoto Cabinet is the successor (more or less) to Tokyo Cabinet, and like it, is a fast, modern, embedded database, not unlike Berkeley DB.

How fast can we stash movies in that, I wonder?

| Method | Elapsed | User | System |

| Kyoto Cabinet/JSON | 1.23 | 1.18 | 0.05 |

| Kyoto Cabinet/MsgPack | 1.65 | 1.19 | 0.45 |

| SQL Individual Insert | 10.4 | 4.1 | 1.8 |

| SQL Bulk Insert | 2.1 | 1.6 | 0.04 |

| SQL Alchemy | 33.2 | 26.4 | 2.1 |

| SQL Alchemy Bulk | 33.4 | 26.8 | 1.9 |

| Elixir | 35.8 | 28.7 | 2.3 |

Pretty darn fast, is the answer. That's a little unfair, since Kyoto Cabinet is an embedded database, not a database server.

(Interestingly, SimpleJSON outran MessagePack in this test, and for a very odd reason - system time went up ninefold.)

Oh, and one other thing - the random movie generator itself takes about 1s of that 1.23s. So it's not 30x faster than Elixir here, it's more like 100x faster.

Kyoto Tycoon is a server layered atop Kyoto Cabinet, so I'm off to tinker with that next.

Update: 0.43 seconds to create a list of 100,000 random movies; 0.52 seconds to pack them all as JSON; 0.11 seconds to write them to a BTree-indexed Kyoto Cabinet database. I've seen benchmarks that put Kyoto Cabinet at over a million records per second, and here it is running in a virtual machine under Python, single-threaded, at ~900,000. So, yeah, it's quick.

Update: Teehee!

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

11509 pixy 17 0 512m 82m 3932 S 237.4 0.2 0:39.00 python Why yes, that Python program is using 2.37 CPUs.

It looks like two (busy) threads are the limit for Kyoto Cabinet, though; at four threads throughput actually went down by about 20%.

Posted by: Pixy Misa at

08:49 AM

| Comments (1)

| Add Comment

| Trackbacks (Suck)

Post contains 289 words, total size 4 kb.

Friday, January 14

Update: I think I've worked out why my benchmark results were so bad, and I'll be modifying my code and re-running them. Stay tuned!

Well, possibly 1993.

Writing SQL is one of my least favourite tasks. SQL Alchemy and Elixir promise to magic this away (most of the time) by providing an object-relational mapping and an active record model.

What is now called the active record model is what I used in my day job from 1988 to 2006. It's good. In fact, anything else sucks. What the active record model means is that you interface with your database as though your data were yours, and not some precious resource doled out to you by your electronic betters as they saw fit.

What's not so good is that the performance I'm getting is more 1988 than 2006, let alone 2011.

I've created a database of 100,000 random movies (based on the Elixir tutorial). That took around 30 seconds. Then I went all Hollywood and created remakes of them all - that is, I updated the year field to 2012.

As a single statement in MySQL, that took 0.38 seconds.

As 100,000 individual raw updates fired off through the MySQL-Python interface (which is a horrible way to do things), 9 seconds.

Via Elixir... 230 seconds.

No. Just no. That's crap.

You can show the SQL that Elixir/Alchemy generates, and it's the same as what I'm doing manually. The SQL is fine. It's the rest of the library that's a horrible mess.

It takes 5.8 seconds to read those 100,000 movies in via Elixir.

It takes 0.34 seconds to read them in via MySQLdb and convert them into handy named tuples.* (In essence, inactive records.)

Okay, hang on one second. I'm using the less-well-known Elixir here on top of SQL Alchemy. How much of the damage is Elixir, and how much is SQL Alchemy itself?

Let's see! This is my first benchmark, creating 100,000 random movies:

| Method |

Elapsed |

User |

System |

| Single insert |

10.4 |

4.1 |

1.8 |

| Bulk insert |

2.1 |

1.6 |

0.04 |

| SQL Alchemy |

33.2 |

26.4 |

2.1 |

| SQL Alchemy Bulk |

33.4 |

26.8 |

1.9 |

| Elixir |

35.8 |

28.7 |

2.3 |

(The bulk insert is not directly comparable; it's just the sane way to do things.)

Elixir is described as a thin layer on top of SQL Alchemy, and that appears to be true; the overhead is less than 10%, and it's quite elegant. The overhead of SQL Alchemy, on the other hand, is ugh.

This is why I spend so much time testing performance. I spend a lot more time benchmarking than I do actually writing code - or testing it. Because if your code has a bug, you can fix it. If your performance is broken by design, you have to throw the whole thing out and start again.

I know the SQL Alchemy guys aren't idiots, and I know they've spent considerable time profiling and optimising their code. What I don't know is why it's still so horrible.

The pre-release 0.7 code is supposed to be 60% faster, but I'm looking for 600% just to reach something acceptable. Is that even possible? I don't know, but I'll try to find out.

Update: Retested SQL Alchemy with bulk inserts (using add_all()). No improvement.

* 300,000 records per second is pretty quick, though keep in mind that these are tiny records - just four fields.

Posted by: Pixy Misa at

04:04 AM

| Comments (6)

| Add Comment

| Trackbacks (Suck)

Post contains 564 words, total size 6 kb.

Thursday, January 13

At my day job, we need to encode about 3000 incoming messages per second and send them whizzing around our network, to be plucked from the stream and decoded by any or all of a couple of dozen subsystems. No serialisers and deserialisers are something of a hobby of mine.

Mostly, we use JSON. (And thank you JSON for all but killing off XML. XML sucks and deserved to die.)

Every so often I run a little bake-off to see if there's anything significantly better than a good implementation of JSON. Until now there hasn't been anything with equivalent ease of use and significantly better performance.

Until now:

| Codec | Dump | Load | Bytes |

| JSON | 2.37 | 2.19 | 1675 |

| Pickle | 3.03 | 2.37 | 1742 |

| MessagePack | 1.37 | 0.29 | 1498 |

| MessagePack/List | 1.60 | ||

| MessagePack/Schema | 0.38 | ||

| YAML | 95.27 | 78.04 | 1631 |

| BSON | 2.85 | 1.05 | 1735 |

(Times in seconds for 100,000 records.)

No, it's not YAML. YAML is nice for easy-to-edit config files, but it's lousy for encoding and shipping lots of data. It's too flexible, and hence too slow.

MessagePack, on the other hand, is a compact binary JSON-style encoding. It's about twice as fast as JSON (I use SimpleJSON, a fast C implementation) for dumping data, and up to ten times as fast for loading it. In situations like a database where reads can be 100x as common as writes, that's a huge win.

One thing I noticed is that MessagePack for Python returns arrays as tuples (which are immutable) rather than lists. There are two ways to fix this in my use case. First, MessagePack itself allows you to provide a callback function used when creating arrays, so you can tell it to create lists instead. Second, I could use an advisory schema to look at the data after it's been unpacked and make corrections as needed.

As you'd expect, one of these methods takes a lot longer than the other and leaks memory like mad. Guess which one.

Oh, and the other thing - in my testing, I tried this out in both native Python and my usual environment of Python 2.6 with the Psyco JIT. In the latter environment, I was doing callbacks from the Cython implementation of the MessagePack wrapper - that is, statically compiled Python - to the Pysco list-creation wrapper function - dynamically compiled Python. And it worked. I thought at first that that was causing my memory leak, but the same leak showed up in native mode.

Update: Added BSON, using the C implementation in PyMongo (which provides a Python wrapper for the C library and a pure-Python version). BSON is comparable with JSON and Pickle on encoding, and falls between JSON and MessagPack on decoding.

The advantage of BSON (to me) is that it natively supports dates and times, which JSON and MessagePack do not. Pickle supports almost any Python object, but that's a problem in itself, and it's Python-specific. YAML supports dates, but it's far too slow for intensive use.

Slight disadvantage of BSON is that everything has to be a document - a Python dict - at the top level. You can't encode an array (list, tuple, etc) without wrapping it in a dict.

Posted by: Pixy Misa at

11:30 AM

| No Comments

| Add Comment

| Trackbacks (Suck)

Post contains 533 words, total size 5 kb.

Tuesday, January 11

It's not a database, it's a toolkit for building databases.

When evaluating a server for mee.nu, it really came down to MySQL or PostgreSQL. MySQL won because of the difference in how you set up full-text indexing.

With MySQL, you put a full-text index on your field, then you use a MATCH ... AGAINST queury.

With PostgreSQL (at the time), you created an extra field in your table to hold the positional terms vector, which you populated by running one of a number of functions over your text field(s) depending on your precise needs, then you created either a GiST or GIN index over the vector, taking into account the tradeoffs of size, indexing time, and query time. Then you used one of a number of weird comparators like && or <@ on your search string to run your query.

Advantage of MySQL: You create the index. You're done.

Disadvantage of MySQL: You have a choice of full-text indexes or concurrency. Four years after I first did this evaluation, this is still true. If you have a full-text index in MySQL (or the MariaDB fork), then a single slow select will lock out all inserts, updates, and deletes on that table. And vice-versa. There are alternate table types that don't have those concurrency issues. They don't support full-text indexes.

Advantage of PostgreSQL: You can do it however you want, and you don't lose other functionality in the process.

Disadvantage of PostgreSQL: You have to work out how you want to do it. Also, at the time, you had to duplicate all the text in a separate field first, and then create the index. This has now been fixed in a very powerful and general way: You can create an index based not on a field, but on some function run against that field (or any combination of fields).

PostgreSQL seems to be very good at providing powerful, generalised solutions.

MySQL is very good at providing solutions that keep 95% of the people happy and are easy to use.

What PostgreSQL needs is some syntactic sugar. What MySQL needs is a whole new storage engine. I'm making no bets on who gets there first.

Posted by: Pixy Misa at

04:59 PM

| Comments (4)

| Add Comment

| Trackbacks (Suck)

Post contains 364 words, total size 2 kb.

Friday, January 07

Micron have just launched a new range of consumer-grade SSDs, the C400 series. These follow on (naturally) from their earlier C300 range, but use Micron's new 25nm MLC flash technology (jointly developed with Intel) instead of the older 34nm chips.

Since 252 is roughly half of 342, this should mean that Micron can pack about twice as much flash into a drive, and indeed they do; the top model of the new range is 512GB compared to 256GB previously. Performance is also up; quoted durability at the drive level has stayed the same at a 72TB lifespan* though the lifespan of the individual flash cells is shorter at 3000 vs. 10,000 cycles.

The new drives are also a little cheaper, at around $1.60 per GB vs. a previous $2 to $2.50.

What's really interesting though is that they are producing both 1.8" and 2.5" models (regular notebook drives, and most current SSDs, are 2.5"). The largest capacity is available in both sizes - even though the physical volume of a 1.8" drive is approximately 1/4 that of a 2.5" drive.**

Those 1.8" 512GB drives provide the highest storage density of any disk drive available today. And transfer rates up to 415MB per second, matching the speed if not the capacity of the 5-disk RAID array we had in our previous server, at about 1/100th the size.

Servers are steadily moving to 2.5" drives, because commercial applications often need lots of disks for I/O performance, leading to things like this 72-bay server chassis from Supermicro:

(If you're counting, it has another 24 bays at the back.)

I can't wait to see something like that, optimised for 1.8" drives...

* That's between five and ten the quoted lifespan for Intel's M-series drives, but still low for a busy database server. Our current server has done 3TB of database writes so far; if I'd deployed that on a single 80GB Intel X25-M (instead of the more expensive but far more durable X25-E's) it would be calling in to schedule its mid-life crisis about now. In reality I think Intel's numbers are likely to be extremely conservative, but I don't like losing databases.

** Disk drives are like ISO paper sizes. You'll find that two 2.5" drives turned sideways fit neatly on top of a 3.5" drive, and the same goes for 3.5" vs. 5.25". Probably true for 5.25" vs. 8" too, but I don't have an 8" drive handy to check.

Posted by: Pixy Misa at

04:52 AM

| Comments (1)

| Add Comment

| Trackbacks (Suck)

Post contains 413 words, total size 3 kb.

Monday, January 03

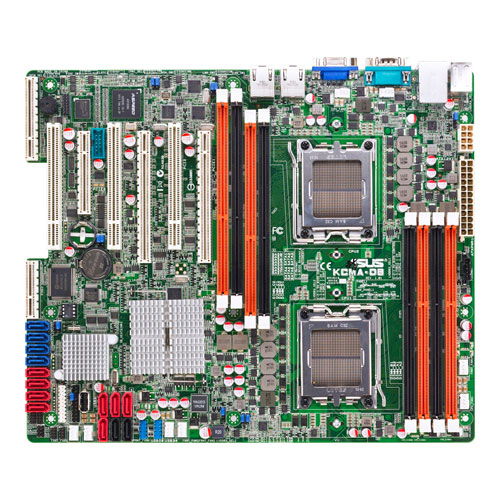

This is shiny:

What it is, is an Asus-built ATX dual-socket Opteron 4100 motherboard. The ATX part means it's small enough to fit in a standard desktop case; the Opteron part means it's a proper server/workstation motherboard; the 4100 part means that it's a low-end server/workstation motherboard, but that means that the CPUs start at about $100. And the motherboard itself is under $300, so you can build yourself a powerful system very inexpensively.

By comparison, the Xeon X5670 processors in the server currently running this site retail for nearly $1500 each.

It's not without its flaws, though. Those eight SATA ports at the far left don't work without an optional proprietary RAID module, which start at $140.* And though it has a dedicated server management port on the back, the server management module itself is a $60 option, bringing the total to around $500. That brings the price close to the $600 for this Tyan motherboard, which has the same feature set but far better expansion options.

However, the Tyan motherboard won't fit in a standard desktop case - nor, as it turns it, in the server case I was planning to use.

Bugger.

The Asus board would be great for my next home Linux box - it will give me a cheap platform for 8 to 16 cores and 32GB of RAM. It would also be perfect for a mu.nu server that I own rather than rent; the sole difference being that I'd use ECC RAM in that one.

For Windows, while all those cores and all that RAM would be lovely, the limited expansion is less so. The board has just two USB ports on the back - USB 2, not 3; no Firewire, no audio at all, no eSATA, and server-grade (i.e. pathetic) built-in video. So it would need, at a minimum, a video card, a sound card, a SATA controller (6 working ports ain't enough) and a USB 3 card. Since there are only 3 PCIe slots, the sound card is going to be PCI.

Would be PCI, if I were planning on buying four. One. Planning on buying one. Clearly I'm not going to buy one for Windows and one for Linux and one for mu.nu and one for mee.nu. I mean, not today. The stores are closed.

Update: Oh, wrong case. Supermicro do have very similar, and only slightly more expensive, cases that support the larger size of the Tyan motherboard mentioned above. So we're good to go there. Still waiting for CentOS 6 and a stable OpenVZ kernel for it, and for the new generation of Intel SSDs, before I start plunking down cash. The one advantage with renting servers is that I can change my mind at any time. Once I buy hardware, I'm stuck with it unless it actually doesn't work.

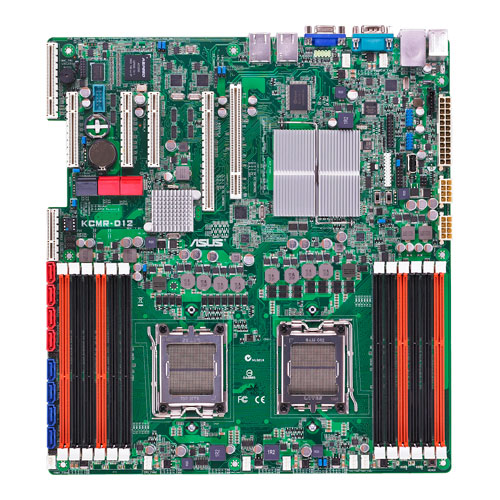

If the above KCMA-D8 ($310 from Acmemicro, who built our new 100TB server at my day job) isn't enough, there's the KCMR-D12 for $379:

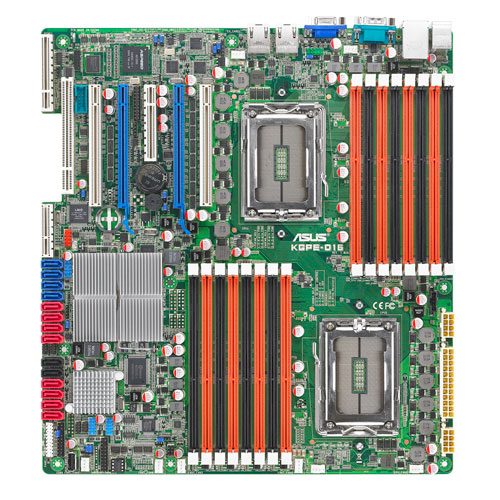

Or the KGPE-D16 for $445:

Those won't fit in a standard desktop case, obviously; they're quite a bit bigger. They take the same RAID and server management modules, and a whole lot more RAM - 12 and 16 DIMMs respectively. The D16 can actually accept 16 cheap 4GB unbuffered modules if you really want to do that.

The D8 and D12 are Opteron 4100 boards, and support 4-core and 6-core CPUs, with 8-core coming later this year; the D16 is an Opteron 6100 board, supporting 8-core and 12-core CPUs, with 16-core coming later this year. The 8-core CPUs are still reasonably priced: A 4180 6-core 2.6GHz CPU costs $206; a 6128 8-core 2.0GHz CPU costs $286. And the Opteron 6100 supports 4 processors, for a cheap 32-core machine.

* There is an advantage to the proprietary RAID card, though - it's small enough to fit upright in a 1U server case. You don't even need to muck around with riser cards.

Posted by: Pixy Misa at

04:06 PM

| No Comments

| Add Comment

| Trackbacks (Suck)

Post contains 661 words, total size 5 kb.

56 queries taking 0.2351 seconds, 387 records returned.

Powered by Minx 1.1.6c-pink.