Ahhhhhh!

Sunday, August 30

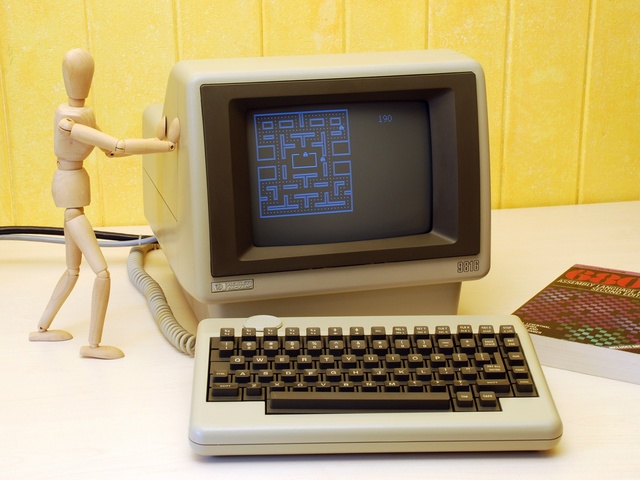

Dream A Twelve-Bit Dream Edition

Tech News

- Went out to the shops this evening. I self-isolated for a couple of weeks because I caught a cold - somehow - then for another week because I was feeling lazy, so it was time to emerge from my burrow.

Things were pretty normal. No shortages, except for the stuff that was half price, and no purchase limits. About a third of the people were wearing masks, though about a third of those were only sort-of wearing masks.

- I've nearly completed the programming model for the 10 bit Imagine and 12 bit Dream systems. The 10 bit model is positioned mid-way between the Z80 and the Z8000, with a dash of 6309 because the 6309 is really nice.

The 10 bit mode is a proper subset of the 20 bit mode, so there's no switching between the two; 10 bit code runs unaltered in 20 bit mode, and if you need to add a couple of 20 bit instructions here and there it just works.

The 12 bit system is a design I dreamed up a long time ago, half-way between the 6809 and the 68000. It has registers from A to Z, just because I could.

They're deliberately similar so I can easily target both with the same compiler. I haven't yet looked into 9, 11, or 13 bit designs. The 13 bit system will be as weird as logically possible, perhaps a cut-down iAPX 432 or Linn Rekursiv.

But that will be later.

I also need to figure out the rest of the hardware. I have a handle on the video controller, thanks to the existence of the Atari ANTIC chip and the NEC 7220. But I haven't yet figured out what exactly to do for sound. I want wavetable synthesis, because that's a real thing (the Apple IIgs had it) and purely digital, so I'm not faking an FM synth like the Commodore SID.

The first couple of commercially available DSPs in the early 80s (the NEC 7720 - just to confuse you - and the Intel 2920) didn't have hardware multiply and ran like snails. But a snail might be good enough here. Let's see: 10 voices, stereo, times a sample rate of whatever my imaginary HSYNC is... 18.75kHz, that's fine. 375,000 samples per second. So I would need to sustain one 10x10 multiply every eight cycles at 3MHz. That's not possible with software shift-and-add, but is probably feasible in hardware, if the hardware is feasible.
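A quick sanity check of that budget, as a sketch only. The constant names are mine, and the shift-and-add routine is the generic textbook version, included just to show that it needs ten passes per multiply when the budget is eight cycles in total.

```python
VOICES = 10
CHANNELS = 2              # stereo
SAMPLE_RATE_HZ = 18_750   # one sample per imaginary HSYNC
CLOCK_HZ = 3_000_000

samples_per_second = VOICES * CHANNELS * SAMPLE_RATE_HZ
cycles_per_multiply = CLOCK_HZ // samples_per_second


def shift_and_add(a, b, bits=10):
    """Multiply two unsigned `bits`-wide values; return (product, passes)."""
    product = 0
    passes = 0
    for i in range(bits):
        if (b >> i) & 1:
            product += a << i   # add the shifted multiplicand
        passes += 1             # one pass per multiplier bit
    return product, passes


print(samples_per_second)         # 375000
print(cycles_per_multiply)        # 8
print(shift_and_add(1023, 1023))  # (1046529, 10)
```

Each pass would take several instructions on a real CPU of the period, so software is out by a wide margin, which matches the conclusion above.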

Oh, right, the 8086 had hardware multiply. How fast did that... Oh. A minimum of 70 cycles for an 8x8 multiply. Might need to rethink this part.

Update: The Z8000 was about twice as fast as the 8086, completing a 16x16 multiply (rather than 8x8) in 70 cycles.

The TMS32010 came out in 1983 and included a hardware multiplier that could complete a 16x16 multiply in 200ns - one cycle at 5MHz. Only problem is that it cost $500 at a time when an entire C64 cost $300.

By 1987 it was being used in toys, but in 1983 TI shipped a total of just 1000 units.

- When I was looking into doing this in hardware last year I considered using real components - 6809, Z80, and/or 6502. When looking up component availability for these 40-year-old chips, one name kept popping up: Rochester Electronics.

I'd never heard of them and wondered if they really had all this old stock. Turns out yeah, they kind of do. (Wikipedia)

They have over 27 billion components in stock, counting both complete devices and unpackaged dies. They also have a license to manufacture some old chips from the original designs, including the 6809.

- The Radeon RX 5300 sounds like it should be terrible but probably is just fine. (Tom's Hardware)

The 5700 is their mid-range gaming card - AMD don't really have a high-end gaming card in their current lineup, with Big Navi yet to make an appearance - with the 5600 in the low-mid range and the 5500 for entry-level gaming.

So two notches below entry-level means bad, right?

Maybe not. Seems to be a cost-reduced 5500, with 3GB GDDR6 RAM vs. 4GB, but the same 22 CUs. The current Ryzen 4000 APUs by comparison have 8 CUs, and they're just fine for light gaming, so this won't be bad at all.

Looks like it missed one mark, with a 100W TDP. At 75W it could run on PCIe slot power alone; instead it will need an additional 6-pin power lead.

- Samsung have officially announced their 980 Pro SSDs. (WCCFTech)

These are PCIe 4.0 models that really use the PCIe 4.0 bandwidth: up to 7GB/s reads, up to 5GB/s writes, up to 1M IOPS.

Maximum capacity is only 1TB, which is kind of dumb. Are these MLC? Is that wh... Nope, TLC. Or as Samsung calls it, "3-bit MLC".

- Hacker News has fallen over. So no quirky little items for you today. (A lot of it makes its way to Reddit as well, but it's harder to find there.)

- Google wants to break the web. (Brave)

I mean, so do I most days, in the sense of throwing a virtual brick in its direction, but Google could actually screw things up in a major way.

This is about the big G's proposal of Web Bundles which are bundles of... Stuff.

The benefit of this is that one pre-compiled bundle can be downloaded faster than a hundred or two hundred pissy little files. The front page of Reddit, for example, makes 158 requests even with ads and trackers blocked.

The downside of this is that we already had this and it was called Flash.

Ratchet and Clank Extended Gameplay Trailer of the Day

This looks amazing. But also kind of dull. But then I'm not the target audience.

It does effectively show off the PS5 hardware. And the Xbox Series X is even more powerful, as far as graphics rendering goes.

Posted by: Pixy Misa at 10:10 PM | Comments (10)


Post contains 1056 words, total size 8 kb.

With Ten Bits You Get Egg Roll Edition

Tech News

- The Eiyuden Kickstarter is coming down to the wire.

With just over an hour to go, will they be able to make it to their... Hang on... 45th stretch goal?

Yeah, this one has been a success.

Update: They made it.

- Stop trying to make Arm servers a thing. They are not... Oh, you stopped. (Serve the Home)

Marvell has cancelled the general release of their Thunder X3 CPU. They'll continue to develop for custom and embedded servers, but they've given up trying to take on AMD for now.

- The Lenovo Yoga Slim 7 is a pretty good laptop. (Tom's Hardware)

Though Lenovo really only did two things:

1. Use an AMD chip.

2. Not fuck up too badly.

Apart from the Ryzen 4800U it's nothing special, but it mops the floor with its Intel-based competition. That may change when Tiger Lake arrives, but right now, AMD owns this space.

Two slight problems with it: First, it lacks the Four Essential Keys, and second, you can't buy it anyway.

- Two trillion dollars buys a whole lot of fuck you.

I can think of less stressful ways to earn a living than developing software for iOS. Juggling electric eels, for example.

- Apple pulled the plug on Fortnite. (Tech Crunch)

You can no longer get it from the App Store, and Epic's developer account has been zorched. Epic did get an injunction against Apple forbidding them from taking the same action against Unreal Engine for now.

- JetBrains also has a programmer font.

Those ligatures look like a really, really bad idea though. A ligature for ≥ sounds great until you realise that ≥ is its own distinct Unicode character, and you can no longer tell the difference between working code and line noise.

- A malloc Geiger counter. (GitHub)

That is a really cool idea. I'll put a hook into my virtual machine to do that sort of thing. A click every time a virtual interrupt happens, or a display list instruction is executed. With suitable prescaling you'll be able to hear how smoothly your code is running.

In fact, I might build the hook into the emulated machine rather than the emulator. What would the display list processor and the sound chip need to be able to do that? If the list processor could trigger interrupts on the other cores - that would do it, and be hugely useful generally. Yes.
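The prescaling part is simple enough to sketch. This is a hypothetical helper, not code from the Geiger counter project or from my emulator; `ClickPrescaler` and its callback are names invented for illustration.

```python
class ClickPrescaler:
    """Emit one click for every `divisor` events (hypothetical helper)."""

    def __init__(self, divisor, on_click):
        if divisor < 1:
            raise ValueError("divisor must be at least 1")
        self.divisor = divisor
        self.on_click = on_click
        self.count = 0

    def event(self):
        # Called once per virtual interrupt or display list instruction.
        self.count += 1
        if self.count >= self.divisor:
            self.count = 0
            self.on_click()


clicks = []
interrupt_hook = ClickPrescaler(divisor=4, on_click=lambda: clicks.append("click"))
for _ in range(10):
    interrupt_hook.event()
print(len(clicks))  # 2
```

In a real emulator the callback would poke the audio output rather than append to a list, and the divisor would be tuned until the clicking is audible but not a continuous buzz.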

- Objective-Rust. (Belkadan)

For when you want absolutely everyone to hate you.

Posted by: Pixy Misa at 01:13 AM | Comments (1)


Post contains 441 words, total size 4 kb.

Saturday, August 29

Ambulatory Solifugid Edition

Tech News

- Is it reasonable to include a video controller co-processor like the Amiga's Copper in a 1983 design? As it happens, yes. (Wikipedia)

The same basic functionality was present in the Atari 400 and 800 as early as 1979, and gave the Atari 8-bit range (including the 5200) a lot of their flexibility.

And it's very easy to emulate those functions - as long as the video emulator respects its virtual registers, all the co-processor needs to do is write to them.

So you can set it up to switch from 32-colour mode to 512-colour mode to text mode to cell mode to Amiga-style HAM mode to Apple IIgs-style fill mode on a line-by-line basis.
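A minimal sketch of that idea, assuming a display list that is just a table of register writes keyed by scan line. The register name `MODE` and the list layout are made up for the example; the real ANTIC and Copper formats are more involved.

```python
def run_frame(display_list, lines, registers):
    """Return the video mode in effect on each scan line.

    display_list maps a scan line number to a list of (register, value)
    writes performed just before that line is drawn.
    """
    modes = []
    for line in range(lines):
        for reg, value in display_list.get(line, []):
            registers[reg] = value   # the co-processor only writes registers
        modes.append(registers["MODE"])  # the video emulator only reads them
    return modes


registers = {"MODE": "32-colour"}
display_list = {
    2: [("MODE", "512-colour")],
    4: [("MODE", "text")],
}
print(run_frame(display_list, 6, registers))
```

The co-processor and the video emulator never talk to each other directly; the virtual registers are the whole interface, which is why this is cheap to emulate.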

Reading up on the Atari architecture I noticed a number of criticisms of Atari Basic. While the criticisms were not unfounded, the whole thing fit in an 8k ROM cartridge. "Hello, world." doesn't fit in 8k these days.

- Speaking of Elite - which we were - Elite Dangerous: Odyssey is due next year. (WCCFTech)

This is a tactical expansion that lets you land, leave your ship, and shoot the bad guys in person.

- Google is removing fediverse apps from the Play Store on the grounds that people are saying mean things on the internet. (Qoto)

Hacker News thread here.

- Apple is back to playing notice me, senpai with the DOJ. (ZDNet)

You are not permitted to work around the 30% cut Apple takes of every transaction.

You are also not permitted to mention the 30% cut Apple takes of every transaction.

- I forgot that this little beastie existed.

That is the HP Series 200 Model 16. Introduced in 1982, it's an 8MHz 68000-based system with 128k to 512k of RAM. It's usually seen with its dual 3.5" floppy expansion - one of the first products to use 3.5" floppies - but all the system logic is packed into that little monitor unit. The floppies reportedly ran at 600 RPM - twice as fast as normal - though I haven't found documentation to confirm that.

The screen is only 9", monochrome, and 400x300 (though text mode may have had a higher effective resolution), but that aside it was an impressive system for '82. It was not, of course, cheap, starting at around $4000.

Posted by: Pixy Misa at 02:39 AM | Comments (4)


Post contains 397 words, total size 3 kb.

Friday, August 28

Where Oh Where Have My GAA-FETs Gone Edition

Tech News

- How much memory did home computers realistically have in 1983? The C64 came out in 1982, so that's one datapoint. The C128 came out in 1985.

But the Atari 1040ST came out in 1986. The Amiga 1000 in 1985 had 768K with the 256K expansion module. By the time I bought mine at the start of 1987 that shipped as standard but I don't know when that started.

If you look up the specs it will say that it came with 256K on board plus 256K on the expansion module, but that's not quite true. The OS ROMs weren't ready at launch, or indeed 18 months later, and the system came with an extra 256K of RAM instead so you could load what should have been there from a "Kickstart" disk. Installing the ROMs - kits became available in 1987 - turned that 256K into usable fast RAM.

I'm not sure if the A1000 ever shipped without that extra 256K of RAM, though the A500 shipped with 512K RAM and working ROMs.

So 128K in 1983 and 256K in 1984 is not entirely unreasonable. By mid-85 256K was something you could patch into hardware because the software wasn't ready.

Here's a handy collection of old Bytes on Archive.org.

I found the hard data I needed in - of all places - the New York Times. In June 1983 - dead centre on my imaginary time window - 256k DRAMs were sampling but not yet in production, and 64k chips were $4.50 each.

So 128k would run $72, and 256k $144. Plus another 25-50% if we're using 10-bit bytes, depending on whether we're using x1 or x4 chips. By comparison the C64 launched at $599 but the price came down pretty fast.
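The arithmetic, spelled out as a sketch. It assumes the $4.50 part is a 64-kilobit x1 chip and that 8-bit memory is the baseline; the function names are mine.

```python
import math

CHIP_PRICE = 4.50          # 64-kilobit DRAM, June 1983 (figure quoted above)
CHIP_BITS = 64 * 1024


def dram_cost(kbytes, bits_per_byte=8):
    """Cost of `kbytes` K of memory built from 64-kilobit x1 chips."""
    total_bits = kbytes * 1024 * bits_per_byte
    chips = total_bits // CHIP_BITS
    return chips * CHIP_PRICE


def width_overhead(chip_width, bits_per_byte=10):
    """Extra chips per bank, relative to 8-bit bytes, for a given chip width."""
    return math.ceil(bits_per_byte / chip_width) / math.ceil(8 / chip_width) - 1


print(dram_cost(128))      # 72.0
print(dram_cost(256))      # 144.0
print(width_overhead(1))   # 0.25  (10 chips per bank instead of 8)
print(width_overhead(4))   # 0.5   (3 chips per bank instead of 2)
```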

Since 256k chips were sampling in June '83, I can reasonably posit that the Imagine 1000 - which seems like as good a name as any for a fictitious 10-bit system - was designed from the start to be upgraded from 64k to 256k chips.

Using 3 4416 chips per bank to give 10 bits plus parity, 24 chips could provide the original system with 128k in total, while the Model B could come with half that number of chips but double the memory. And the Model A could be upgraded to a maximum of 512k with a simple chip swap.
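Checking the chip counts, assuming the 4416 is a 16K x 4 part and the Model B uses pin-compatible 64K x 4 parts (my assumption for the upgrade path, not something stated above):

```python
K = 1024


def memory_words(chips, words_per_chip, chips_per_bank=3):
    """Total words of memory when every bank is `chips_per_bank` x4 chips wide."""
    banks = chips // chips_per_bank
    return banks * words_per_chip


print(memory_words(24, 16 * K) // K)  # 128  (Model A as shipped)
print(memory_words(12, 64 * K) // K)  # 256  (Model B, half the chips)
print(memory_words(24, 64 * K) // K)  # 512  (Model A after a chip swap)
```

Three x4 chips give 12 bits per bank, so 10 data bits plus parity leaves one bit spare.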

That works. Why did no-one make this thing? Not that I had remotely that much money as a kid...

Update: In the March '83 issue of Byte there are ads for 64k memory upgrades (with parity) for $50, and the C64 could be found for $299 after rebate.

- TSMC's 3nm node will stick with FIN-FETs, with GAA pushed back to 2nm. (AnandTech)

I don't remember if TSMC had previously said that 3nm would be GAA, or just spoken of 3nm and GAA in the same breath and the rest was speculation.

Oh, and 2nm is coming.

- A sneak peek at Tiger Lake does show a single-threaded / IPC advantage over Zen 2. (Tom's Hardware)

Single-threaded performance is on the order of 5% better than a Ryzen 4300U, and since this particular Tiger ran at a lower clock speed, IPC is around 10% better. On multi-threaded workloads the 4-core/4-thread Ryzen 4300U was 45% faster than this 2-core/4-thread Tiger Lake chip, so AMD will retain a very comfortable multi-threaded lead even while we wait for Zen 3.

Intel is also expected to catch up on the graphics side with these new chips, but the leaked benchmarks don't say anything about that.

- Salesforce recorded a record quarter and immediately laid off 1000 staff. (Tech Crunch)

Uh. Timing is everything, guys.

- The share price of Fucking Elastic, makers of Fucking Elasticsearch, is at an all-time high. (WCCFTech)

No, I am not at all annoyed with the deliberately-introduced deficiencies of Fucking Elasticsearch. No, I didn't spend yesterday working around a broken-as-designed upgrade that wrecked application features that used to run just fine. Why do you ask?

- Milan will be up to 20% faster than Rome, sort of. (WCCFTech)

Rumours have been pretty consistent that Zen 3 has 10-15% better IPC than Zen 2, with the other 5-10% coming from minor clock speed improvements similar to the slight nudges we saw with the 3800XT.

It looks like L3 cache remains the same, but L2 cache has doubled, which would contribute to those IPC gains and help reduce contention on the new unified L3 cache.

Meanwhile Genoa is due by 2022 with "more than 64 cores", Zen 4, DDR5, and PCIe 5.0.

- Arwes makes your application look like it belongs in the 21st century. (Arwes.dev)

The 21st century as seen in the 1980s, anyway, which - looking at you, 2020 - was more optimistic than accurate.

- ArangoDB 3.7 supports JSON Schemas and other good stuff. (ZDNet)

It's a multi-model database - relational, object, document, graph, whatever - though currently pushing the graph features and its use case as a machine learning back-end, because that is the new hotness this month.

I've followed its progress but not actually used it, so can't say how well it really holds up.

Posted by: Pixy Misa at 01:43 AM | No Comments


Post contains 887 words, total size 7 kb.

Wednesday, August 26

Itsy Bitsy Teeny Weeny Edition

Tech News

- Need 16 cores, 128GB of RAM, and dual built-in 10Gbit Ethernet ports on a mini-ITX board? ASRock Rack has you covered. (Serve the Home)

It's a server board, so rear panel I/O consists of the two 10GbE ports, two USB ports, VGA, and a separate 1GbE management port. The design is server-oriented in other ways as well: It's an X570 board with no chipset fan, so without server-style case fans it's gonna melt.

I'm not sure what the market is for mini-ITX server boards, since a rackmount case has plenty of room for ATX and even larger boards.

- Birds, honestly, kind of dumb. (Ars Technica)

Painting one blade of a wind turbine does seem to help them notice that there is a huge whirling death machine in their flight path, though, reducing impromptu chicken dinner incidents by more than 70%.

- Not beta, just broken. (The Register)

The new version of Firefox for Android is here.

People don't like it.

There's no option to go back to the old version.

Well, it's open source so I suppose building it yourself and side-loading it is sort of an option, though with the current limitations of copy-protection you'll probably be locked out of YouTube even if you do that.

- The upcoming RTX 3090 has been rumoured to cost $1400. Another rumour might explain that if it really does come with 24GB of GDDR6X RAM. (WCCFTech)

I remember being at a computer show years back and sitting down to watch a presentation. They had to reboot the computer running the projector and we saw that it had a video card with 24MB of RAM back in the days when 2MB was typical.

And 24MB is still enough to hold a 24-bit 4K framebuffer.
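It's a close fit, though. A quick check, assuming "4K" means 3840x2160 and a megabyte is 2^20 bytes:

```python
width, height, bytes_per_pixel = 3840, 2160, 3   # 24-bit colour
framebuffer = width * height * bytes_per_pixel
card_ram = 24 * 1024 * 1024

print(framebuffer)             # 24883200
print(card_ram - framebuffer)  # 282624 bytes to spare
```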

- The Trump administration is providing a billion dollars in funding for research into AI and quantum computing. (Tech Crunch)

If the Wuhan Bat Soup Death Plague doesn't kill us all, the Nanobot Apocalypse is sure to finish the job.

- The Asus Zenfone 7 has three rear cameras and three front cameras. (AnandTech)

In fact, it has three cameras. They go bziiip and pivot around.

- Asus also has a new 360Hz monitor if you're looking for a reason to buy that RTX 3090. (Tom's Hardware)

Only 1080p, of course. At 4K you're still limited to "only" 144Hz.

- The Microsoft Surface Duo is landing in reviewers' hands right now, and it looks really nice. It looks like a little notebook from back in the days when those were made of paper.

It's striking how much slimmer it is than the folding screen models. At just under 10mm folded up it's still slightly chunky for a 2020 device, but that's just 1mm thicker than my Nexus 7, which doesn't fold unless you really, really want it to.

- I've been looking up some details of 80s graphics chips to figure out what would be reasonable to implement in my imaginary system. Of course I know exactly what the Amiga could do since that was my primary computer for five or six years (an A1000 and then an A3000).

There was a Hitachi chip from the early 80s I was trying to find - turns out I was thinking of the HD63484 from 1984. (Computer.org)

That was followed in 1986 by Intel's 82786 which actually implemented my dual-bus architecture, and Texas Instruments' 34010, which was a graphics-oriented CPU.

Before all of those, in 1982, NEC introduced their uPD7220. (Hackaday)

Since that was a real chip that really existed (and was hugely successful), anything it could do is fair game for my emulator. Also the chip from the original MSX systems, which came out in 1983, right in my fictional time window.

Posted by: Pixy Misa at 11:47 PM | No Comments


Post contains 691 words, total size 6 kb.

Tuesday, August 25

Multiple Dispatch Edition

Tech News

- Implemented a full set of instructions in the experimental VM in Nim - though three quarters of them are just dummies cut-and-pasted to fill out the case statement.

Performance dropped from 80 MIPS with one instruction implemented to 72 MIPS with 32 instructions. And that's without using the pragma options to optimise the case statement. Also using an array for the registers rather than individual variables, which simplifies the instruction dispatch but generates less efficient code.

So it seems to be safely past the point where PyPy had its nervous breakdown.

I might go with a default configuration of 128k each of system RAM and video RAM rather than worrying too much about squeezing things into 64k. The point of an imaginary 10-bit computer is, after all, that you can have more than 64k of linear address space.

I remember thinking about how nice it would be to have a whole 128k of RAM back then. Then of course I got an Amiga and skipped the entire late-8-bit generation.

- Nim imports all the methods of a module when you import a module.

That is, with Python, if you import the time module you get the time by calling time.time() but with Nim it's just time().

Python needs to do this because if you import a bunch of modules and they use the same name for some of the methods, it can't tell which version you mean.

Nim, though, uses multiple dispatch. If you have a save function that saves image data to a JPG file, and a save function that saves a record to a MongoDB database, they have completely different parameters and return types.

Python is dynamically typed so that's not a lot of help. Nim though is statically typed, so it knows which one you mean from the code that calls it.
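The closest thing in standard Python is functools.singledispatch, which gives a rough feel for the idea. It is only an analogy: it picks the implementation at run time from the type of the first argument alone, where Nim resolves the call at compile time from the full signature. The Image and Record classes are placeholders invented for the example.

```python
from dataclasses import dataclass
from functools import singledispatch


@dataclass
class Image:
    pixels: bytes


@dataclass
class Record:
    fields: dict


@singledispatch
def save(thing):
    # Fallback when no implementation is registered for this type.
    raise TypeError(f"don't know how to save {type(thing).__name__}")


@save.register
def _(thing: Image):
    return f"wrote {len(thing.pixels)} bytes of image data"


@save.register
def _(thing: Record):
    return f"stored record with {len(thing.fields)} fields"


print(save(Image(b"\x00" * 12)))
print(save(Record({"name": "Elite"})))
```

Both callers just write save(x), and the right version is chosen from the argument type, which is the property that makes unqualified imports workable.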

This makes code less verbose, and makes using modules a more natural part of the language. I'll have to see how it works out in practice.

Also, JetBrains, makers of IntelliJ and PyCharm, are planning to release the first version of their official Nim plugin next month. I've been programming Crystal in VS Code and it is awful.

- TSMC's 5nm node is in production and 3nm is due in 2022. (AnandTech)

Which we basically knew already, but there is new data here as well. The figures given for transistor density - 1.8x going from 7nm to 5nm, and a further 1.7x from 5nm to 3nm - are for logic functions. SRAM scaling is significantly worse.

I originally suspected that the first use AMD would make of 5nm would be to implement even larger caches for their server parts, while another doubling of core counts would be further off. Instead it seems the reverse is more feasible.

That's not until next year at the earliest, anyway. Zen 3 is 7nm.

- And Zen 3+ may also be 7nm. (WCCFTech)

Or on the other hand it may not exist even as a box on a roadmap. Zen was followed by Zen+, but Zen 2 will be followed by Zen 3. People who know aren't talking and the people who are talking don't know.

- Is your Python application too stable? Don't have anything to keep you up at night? Why not write your configuration files in JavaScript? (GitHub)

You can thank me later.

Posted by: Pixy Misa at 11:34 PM | No Comments


Post contains 571 words, total size 4 kb.

Four Frierens And A Funeral Edition

Tech News

- So I rewrote my VM testbench in Nim. With just a minimal framework in place - instruction decode, registers, memory, condition flags, and one instruction - I'm getting 80 MIPS. That's not amazing and I could certainly do better in C++ (and have in the past) but for these purposes it's just fine. The imaginary machine would be lucky to do 1 MIPS downhill with a tailwind, after all.

Nim is nice enough. I like Crystal somewhat more, but Nim is reasonably well-designed and seems reasonably well-implemented. You don't seem to be able to use ternary operators in compound expressions, though, so I'll have to figure out the proper way to evaluate the condition flags.
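For reference, the minimal framework looks roughly like this, sketched in Python rather than Nim. The instruction encoding (4-bit opcode, two 3-bit register fields), the opcode numbers, and the flag names are all invented for the example; they are not the real programming model.

```python
WORD_MASK = 0x3FF          # 10-bit words
SIGN_BIT = 0x200

OP_HALT, OP_ADD = 0, 1     # hypothetical opcodes


def encode(op, dst, src):
    """Pack a hypothetical 10-bit instruction: oooo ddd sss."""
    return (op << 6) | (dst << 3) | src


def run(memory, regs):
    """Execute until HALT; return the condition flags."""
    pc = 0
    flags = {"Z": False, "N": False, "C": False}
    while True:
        word = memory[pc]
        pc += 1
        op, dst, src = word >> 6, (word >> 3) & 7, word & 7
        if op == OP_HALT:
            return flags
        if op == OP_ADD:
            total = regs[dst] + regs[src]
            regs[dst] = total & WORD_MASK
            # Plain boolean expressions, no ternaries needed.
            flags["C"] = total > WORD_MASK
            flags["Z"] = regs[dst] == 0
            flags["N"] = bool(regs[dst] & SIGN_BIT)
        else:
            raise ValueError(f"unimplemented opcode {op}")


regs = [0] * 8
regs[0], regs[1] = 1000, 24
program = [encode(OP_ADD, 0, 1), encode(OP_HALT, 0, 0)]
print(run(program, regs), regs[0])
```

1000 + 24 overflows ten bits, so the result register wraps to zero with the carry and zero flags set.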

I did realise something when thinking about Elite on the BBC Micro. Although the imaginary hardware will implement hardware scrolling and sprites (the TI 99/4 had 32 sprites all the way back in 1979), that only helps for 2d games. For 3d what you need - apart from hardware line-drawing - is double-buffering.

And since I'm deliberately limiting the available memory to keep the system conceptually simple, it doesn't have enough memory to double-buffer at full resolution (a whopping 480x270).

The options as I see them are:

- "Well, that game came out in 1985, when the 256k upgrade was available."

- Weird cheats like the Apple IIgs fill mode to make 3d fast enough that you don't notice the flickering.

- Weird cheats like RLL-encoding of the pixel data to reduce memory requirements, which would be horrible to draw into but very effective when there are only a few colours in use.

- Weird cheats like chroma subsampling, which is period legitimate because both the Apple II and the ZX Spectrum did that. (The ZX Spectrum did it in a rather more organised way; colour in the Apple II's hi-res mode was just plain weird.) There are lots of ways to implement this, some of them easy to do in period hardware, most of them nasty to program for.

- Just use half-resolution - 240x270 isn't completely awful, and it can switch to full-resolution for the controls at the bottom which don't need to be fully re-rendered every frame.

- Drop the pixel clock from 12MHz to 8MHz, reducing the number of pixels per scan line to 320, and pack 5 4-bit pixels into each double-word read instead of 4 5-bit pixels, and finally letter-box it down to, um, oh, right, 256 lines. That's exactly 64k.

- The (imaginary) video chip has dual buses, originally so that the CPU could write directly to video RAM as it would with any other RAM, just with wait states inserted until the VRAM was free. But vice-versa, that allows the video controller to map system RAM for video functions, stealing cycles from the CPU. Still, using 480x270 5-bit mode with double buffering like this would leave 736 bytes free for your 10-bit version of Elite, so it might need to be applied in combination with some other trick.

(This is why I mentioned the video controller would have needed more than 40 pins if it had ever been made - two 10-bit data buses and two 8-bit multiplexed address buses is 36 pins before any control signals or even power and ground.)
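The memory figures in those options can be checked with a short sketch. It assumes that "bytes" here means 10-bit words, that pixels are packed into 20-bit double-word reads as described, and that each 64k block of RAM holds at most one full-resolution buffer.

```python
K = 1024


def frame_words(width, height, pixels_per_double_word):
    """10-bit words needed for one frame with pixels packed into 20-bit reads."""
    double_words = (width * height) // pixels_per_double_word
    return double_words * 2


full = frame_words(480, 270, 4)        # 4 five-bit pixels per double word
half = frame_words(240, 270, 4)
letterbox = frame_words(320, 256, 5)   # 5 four-bit pixels per double word

print(full, 64 * K - full)   # 64800 736  (one buffer per 64k, 736 words left)
print(2 * half)              # 64800      (double-buffered half resolution)
print(2 * letterbox)         # 65536      (double-buffered, exactly 64k)
```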

- Every ten years, move everything that's done on the client to the server, and everything that's done on the server to the client, and everyone will think you're a genius. (Solovyov.net)

Server-side rendering, huh? Whatever will they think of next?

- We are continuing to work on a fix for this issue. (ZDNet)

I felt a disturbance in the Force, as if millions of children suddenly cried out with joy.

Zoom is down.

Given the nature of computers of that era it might be appropriate to implement all of these and never bother to test what happens if you set all the mode bits at once.

Posted by: Pixy Misa at 12:13 AM | No Comments


Post contains 662 words, total size 5 kb.

Sunday, August 23

Zero Bit Edition

Tech News

- Was getting decent performance from my experimental VM under PyPy, implemented a couple more instructions, and speed absolutely cratered, dropping from 20 MIPS to 0.33 MIPS in one go. In fact, PyPy is half the speed of regular Python now.

It's not at all obvious why this is. I was curious if it was a compiler bug, so I added some more instructions and performance jumped back up to 5 MIPS.

So... Yeah. I might want to find a better language for this sooner rather than later. There's an SDL2 binding for Nim, so that's an option. Or maybe Go. I don't want to use C, and Crystal doesn't fully support Windows yet.

I do understand that tight loops full of indirect lookups and branches are tricky to optimise, but if the compiler is as flaky as this I won't be able to make any progress.

Update: Gave Nim a try. It... Seems to work. It looks like it's well-suited to the task, and it's reasonably quick. It has bounds-checking enabled by default so it's not as fast as C, but that's a really nice feature to have during development. The bounds checking and subrange types remind me a little of Ada.

Update 2: In fact, Nim has features designed specifically for this sort of thing, like a pragma to force it to compile a case statement as a computed goto.

- AMD released a retail version of the Ryzen 4000 APU and they're sold out already. (WCCFTech)

It's not clear how much stock they - they being stores in Akihabara in this case - had on hand, but whatever they had they sold on the morning of the first day of release.

- As mentioned previously, AMD is reportedly up to something weird with their next-gen APUs. The high-performance Cezanne chips will be Zen 3 with Vega graphics, while the low power Van Gogh chips will be Zen 2 and RDNA 2. (WCCFTech)

Presumably the development timelines of the CPU and GPU cores simply didn't line up. The new Xbox and PlayStation parts are also Zen 2 with RDNA 2.

Van Gogh will support LPDDR5 and TDPs down to 7.5W, plus a new AI core of some sort.

- A handy guide to de-Googling your life.

Some things are easier than others. I'm happy with DuckDuckGo 95% of the time, but that last 5% is a sticking point.

- Only two boxes of gluten-free chicken nuggets? Well, since they were showing as out of stock and I was expecting zero to arrive with this delivery, that's actually an improvement.

- Recycling is a problem. (Wired)

There are about $3 of recoverable raw materials in a dead solar panel. It costs about $12 to recover those materials. It costs about $1 to just chuck the thing in a landfill.

Posted by: Pixy Misa at 11:05 PM | No Comments


Post contains 498 words, total size 4 kb.

11 Bit Edition

Tech News

- As mentioned in the comments on yesterday's post, I did a very quick benchmark on a minimal CPU emulator in Python. On standard Python I got about 3 MIPS, which is more than a 1983 computer would deliver, but not nearly enough when I take into account also emulating the graphics, sound, and I/O chips. (Plus the fact that it will certainly slow down once I handle things like precisely setting the bits in the F register after every operation.)

Same code in PyPy ran at around 200 MIPS. So that's how I'll build the prototype. If that's successful, the real version will be... I don't know. Maybe Nim? Crystal if they get Windows support working?

I'll see if I can implement it once with options for anything from 9 to 13 bits. I want to have more than 64k directly addressable (without segments or bank switching) but still have somewhat realistic limitations.

9 bits is pretty good there, since that lets you have something very similar to the Commodore 128, but with all the RAM and ROM immediately accessible with no fussing about.

Also updated the imaginary architecture after checking and discovering that the cycle time for 120ns RAM was 220-230ns, not 200ns as I'd half-remembered.

I'd wanted a 5MHz clock because that works out neatly for the video resolution I have in mind, but that now seems infeasible for a home computer of that period. The idea instead is that rather than using a 200ns memory cycle, it would have used a 400ns memory cycle but used page mode to read two sequential words in certain modes, including the critical video updates.

The Amiga 1200 used this trick, keeping the same bus cycle as the original Amiga but reading two 32-bit words at a time instead of one 16-bit word.
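In bandwidth terms the trade works out like this. It's a simplified sketch that treats a page-mode cycle as delivering exactly two words in 400ns, which is the assumption made above rather than a datasheet figure.

```python
def words_per_second(cycle_ns, words_per_cycle=1):
    """Memory throughput for a given cycle time and words fetched per cycle."""
    return words_per_cycle * 1_000_000_000 / cycle_ns


print(words_per_second(200))      # 5000000.0  (the 5MHz design I wanted)
print(words_per_second(400))      # 2500000.0  (plain 400ns cycle)
print(words_per_second(400, 2))   # 5000000.0  (400ns cycle, page mode)
```

So the video side keeps the bandwidth of the original design while the CPU runs at a more plausible 2.5MHz.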

I looked up the instruction and memory timing of the 6502 to make sure I'm being reasonable with my new 2.5MHz fictional CPU, and it turns out that thing was fucking weird. (Xania.org) The 6502 accesses memory on every cycle even if it doesn't yet know what address it wants to read or what value it needs to write. That means that it will sometimes read the wrong address first, and then the correct one, or write the wrong value followed by the correct one.

Since that article is discussing the creation of a cycle-accurate emulator for the BBC Micro, and that's an interesting and powerful 8-bit system that I'm not very familiar with, I did some reading on the specifics of its hardware.

Turns out... It had a 250ns memory cycle. In 1981. A 2MHz 6502 which as we just noted accesses memory on every cycle, and interleaved access by the video controller with no interference or wait states on either chip.

The Wikipedia article notes that the system needed one specific multiplexer device from National Semiconductor to handle this, and compatible parts from other companies didn't work. They shipped 1.5 million units without ever figuring out why.

Reading up on the BBC Micro gives me a specific goal, though: This design should be able to run a game like Elite in shaded rather than wireframe mode. Even if it's generally less powerful than the Amiga, it needs to be able to do that.

- The nearest star-like-object (SLOB) system to ours is still Alpha Centauri. How dull.

After that it's the roundabout at Barnard's Star, and then Luhman 16, a brown dwarf binary discovered in 2013.

I mention this because a team of volunteers has discovered 95 more nearby brown dwarfs (Space.com) including one with a surface temperature of -23C which is rather chilly for a star, even a failed one.

- Apple has kicked the WordPress iOS app out of the App Store for not providing non-existent in-app purchases. (The Verge)

The WordPress iOS app is a generic app for the WordPress API. The source code is all GPL. It offers no in-app purchases via the App Store or otherwise, but was banned anyway.

- Intel is working on 224G PAM4 transceivers. (AnandTech)

And also on 112G NRZ transceivers - 112G exists now, but currently uses PAM4.

This new generation would work for PCIe 8.0. PCIe 3.0, which most of us are still using, runs at 8G. PCIe 2.0 ran at 5G but used inefficient 8b/10b encoding; PCIe 3.0 uses the much more efficient 128b/130b encoding, so at 8G it is about 97% faster than 2.0.

(USB 3.1 gen 2 does the same thing with encoding but actually does double the clock speed, so it is around 2.4x the speed of USB 3.0.)

This new standard would deliver the equivalent of a full x16 PCIe 4.0 slot with just four pins.

Or to put it another way, a PCIe 8.0 x16 slot could read the entire 64k system RAM of my imaginary computer in around 150ns.
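The arithmetic behind those comparisons can be sketched as follows. The 8b/10b, 128b/130b, and 128b/132b encodings are the published ones for these standards; the function name is mine, and the transfer-time estimate assumes 64K of 8-bit bytes, a raw 224G per lane, and no protocol overhead.

```python
def effective_gbps(raw_gbps, payload_bits, total_bits):
    """Usable data rate per lane after line-encoding overhead."""
    return raw_gbps * payload_bits / total_bits

pcie2 = effective_gbps(5, 8, 10)          # 8b/10b
pcie3 = effective_gbps(8, 128, 130)       # 128b/130b
usb30 = effective_gbps(5, 8, 10)          # 8b/10b
usb31_gen2 = effective_gbps(10, 128, 132) # 128b/132b

pcie_speedup = pcie3 / pcie2
usb_speedup = usb31_gen2 / usb30

# Time to move 64K bytes over 16 lanes at a raw 224G each (assumptions above).
ram_bits = 64 * 1024 * 8
lanes = 16
lane_gbps = 224
# Bits divided by Gbit/s gives nanoseconds.
transfer_ns = ram_bits / (lane_gbps * lanes)

print(round(pcie_speedup, 2), round(usb_speedup, 2), round(transfer_ns))
```

If the imaginary machine's 64k is counted in 10-bit words rather than bytes, the transfer takes a quarter longer, but it's the same ballpark.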

- Microsoft Flight Simulator 2020 is out and it requires all the hardware. (Guru3D)

You'll need the fastest CPU and graphics card you can find, 32GB RAM, and 150GB of available SSD storage. And a fast internet connection if you don't want to be waiting for literal days while it downloads, because that 150GB is the initial install size.

Got all that? Well, with your 9900K and RTX 2080 Ti you'll get 51 FPS at 1080p.

Unless you decide to visit New York, in which case frame rates will plummet to about a third of that.

Posted by: Pixy Misa at 12:44 AM


Saturday, August 22

10 Bit Edition

Tech News

- The Intel 80186 arrived in 1982, with 55,000 transistors running at 6MHz in a 68-pin PLCC package. (Wikipedia)

Why does this matter? Well, it doesn't, not really.

Just that since I'm unlikely to make any progress on my hardware retrocomputer any time soon, I've been looking at making an emulator for an imaginary computer from the early 80s. And since it's something that didn't exist, it needs to make sense. It's intended to be something that would logically have come after the Commodore 64 and Sinclair ZX Spectrum, but before the new era of powerful 16-bit systems like the Mindset, Amiga, and Atari ST. That puts it in 1983, or at the latest early 1984.

One thing I figured out is that the imaginary video controller chip I envision would need more than 40 pins, which means either a huge 64-pin DIP or a 68-pin PLCC. Since 68-pin PLCCs obviously existed in that timeframe, I can use an imaginary one with a clear conscience. (The Amiga, by comparison, divided video control into two 48-pin DIPs.)

My imaginary system is going to have a 10-bit CPU, because (a) that's better than 8 bits without throwing everything wide open, and (b) as far as I can tell no-one has ever done that. Plenty of 12-bit systems, zero 10-bit. The closest I could find are John von Neumann's IAS machine (40 bit) and the F14 flight computer (20 bit).

I'll post more details once I get some code that works.

- The Zotac Zbox QCM7T3000 is an almost perfect mini workstation. (Tom's Hardware)

It has a 6-core 45W i7 10750H processor and a 6GB Nvidia Quadro RTX 3000 packed into a case that measures just 8" x 8" x 2.5". And it's almost perfectly symmetrical, with the I/O ports all lovingly paired up EXCEPT THAT THE BACKING PLATES FOR THE TWO ETHERNET PORTS ARE SUBTLY DIFFERENT.

Well, one is 1Gbit and the other is 2.5Gbit, but still.

Two SO-DIMMs, so up to 64GB RAM, one M.2 slot, and one 2.5" drive bay.

- If I suddenly say something has 64K of RAM rather than 64GB, sorry, my mind has been in the early 80s the last few hours.

- Lightroom for iOS considered harmful. (PetaPixel)

The latest update deleted everyone's photos.

It's not supposed to do that.

- Buried in Intel's recent Xe graphics announcements is the unspoken admission that no-one is going to use this for playing games. (AnandTech)

Intel is talking up the SG1, a new video streaming accelerator for datacenters. It will contain four of the Xe chips that will be used in the desktop DG1 card. The DG1 itself is viewed as a developer platform and not a consumer card.

But Intel have at least announced that the Linux Xe driver code will be open source, like AMD and very unlike Nvidia.

- The upcoming antitrust battles may spell the end for iOS as we know it. (ZDNet)

And I feel fine.

- The Synology DS1520+ is still gigabit. (BetaNews)

You're better off in most cases just having someone dump four used DS1812 units on your doorstep. That happens to everyone, right?

Posted by: Pixy Misa at 01:47 AM
