Now? You want to do this now?

I have a right to know! I'm getting married in four hundred and thirty years!

Tuesday, June 20

Well, That's A Thing...

Posted by: Pixy Misa at 06:20 PM

Thursday, June 15

Eaten By Moths

I mentioned in my Winter Wrapup that AMD graphics cards have become almost impossible to find, because they're all being bought by cryptocurrency miners. If you live somewhere with reasonably priced electricity, the pay-back time for a Radeon 570 or 580 is about 3 months, and getting shorter as the currencies increase in value.
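For anyone who wants to check the pay-back arithmetic against their own power bill, here is a rough sketch. The function name and every number in it are hypothetical, picked only so the result lands near the three months mentioned above; plug in your own card price, daily mining income, and electricity rate.

```python
def payback_days(card_price, daily_revenue, watts, price_per_kwh):
    """Days until mining profit covers the card's purchase price."""
    # Electricity cost of running the card flat out for 24 hours.
    daily_power_cost = watts / 1000 * 24 * price_per_kwh
    daily_profit = daily_revenue - daily_power_cost
    if daily_profit <= 0:
        return float("inf")  # the card never pays for itself
    return card_price / daily_profit


# Hypothetical figures, not real market data.
days = payback_days(card_price=250.0, daily_revenue=3.20, watts=150, price_per_kwh=0.12)
print(f"Payback in about {days:.0f} days")
```

Note that the electricity term only matters at the margins here; it is the daily revenue, which moves with the currency price, that drives the pay-back time.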

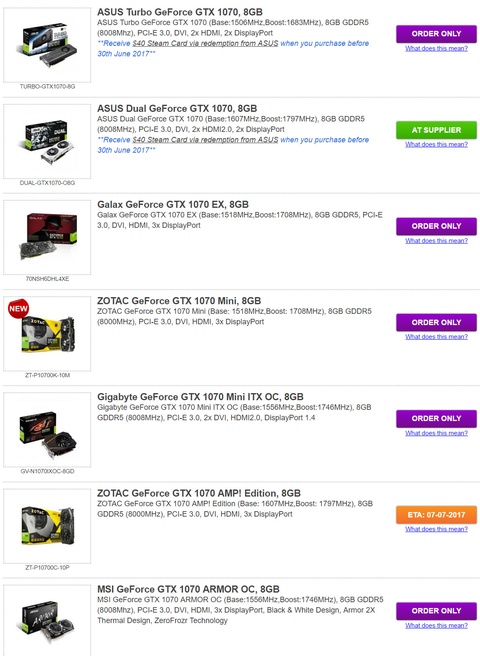

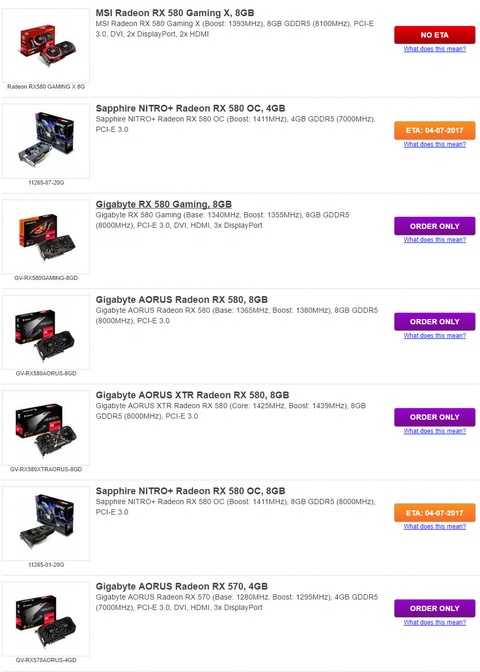

nVidia's cards are less efficient at mining, but they're still more efficient than an AMD card you don't have, so the GPU blight is spreading. A couple of weeks ago, the GTX 1070 (a very nice card) was readily available. Now:

If this keeps up, the Xbox One and Playstation 4 will be next to go - both have AMD GPUs.

Posted by: Pixy Misa at 11:19 AM

Monday, June 12

Tech Industry Winter Wrapup

It's been a busy time in the tech industry, with Computex, WWDC, and E3 following hard on each other's heels, so I thought a quick wrapup of the top stories might be useful to those who don't have the time or inclination to obsess over this stuff, but are nonetheless mildly interested.

The Microsoft Xbox One X, at 6 TFLOPs, is so powerful that its graphics look better than reality.*

Dell's Inspiron 27 7000 is based on the 8-core Ryzen 7, with twin overhead cams and optional supercharger.*

Popular YouTube tech reviewer Linus Somebody spends 14 minutes saying "WTF Intel?"

The iMac Pro has so many cores that it can be seen from the International Space Station.*

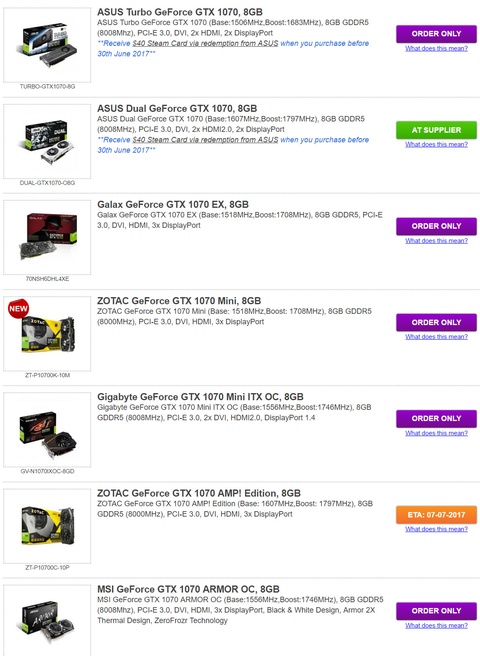

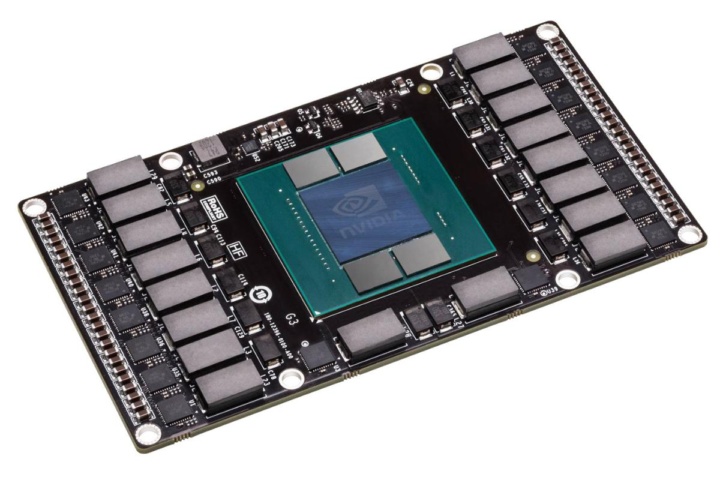

nVidia's Volta GPU is the largest chip ever manufactured. For scale, a row of Greyhound buses is parked along each edge of this picture.*

AMD's entire production line captured by Bitcoin pirates.*

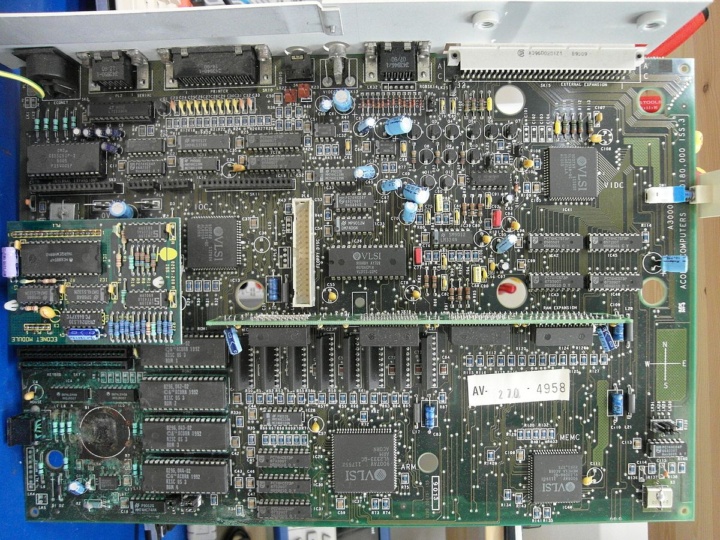

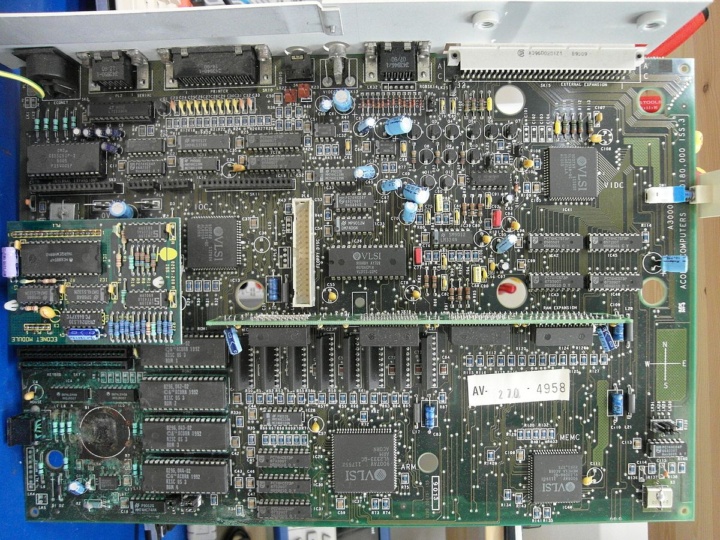

An early ARM motherboard from an Acorn Archimedes A3000. Note that none of the chips have fans, or even heatsinks. That's because these machines were cooled by photino radiation, before this was banned for causing birth defects in igneous rocks.*

Photo by Binarysequence, CC BY-SA 3.0

* Possibly not literally true.

I'll break it down by company, starting with

AMD: Eight Is The New Four

It's shaping up to be AMD's biggest year in a decade. So far they've released their Ryzen R7 and R5 processors and RX 500 series GPUs, announced the new Vega GPU and Epyc server processors for release this month, launched the ThreadRipper high-end workstation CPU, partnered with Apple for the updated iMac and MacBook models and the brand new iMac Pro, and with Microsoft for the Xbox One X (aka the Xbonx).

They've already completely upset the industry by selling 8-core CPUs at 4-core prices, and they'll be doubling down in the second half of this year - literally - by selling 16-core CPUs at 8-core prices and 32-core CPUs at 16-core prices. Look for 16 cores at less than $1000 and 32 cores at under $2000, dramatically cheaper than Intel's pricing (if Intel even had a 32-core chip, which they don't).

AMD's entire revenue stream is less than Intel's R&D budget, so they had to get clever to pull this off. The entire line of CPUs, from 4 cores all the way up to 32, is based on a single universal design with two clusters of 4 cores. A chip with no defects can be sold as an 8-core part, with one or two defects as a 6-core part, with more defects as a 4-core part. Reports are that AMD is actually getting a yield of 80% defect-free chips, so many of the 6-core and 4-core parts probably work perfectly and just have some parts of the chip switched off.
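The binning rule is simple enough to write down. This is only a sketch of the rule as described above, not AMD's actual test flow: the function name, the scrap threshold, and the sample batch are all made up for illustration.

```python
from collections import Counter


def bin_die(defective_cores: int) -> int:
    """Return the core count a die is sold as, per the rule described above."""
    if defective_cores == 0:
        return 8
    if defective_cores <= 2:
        return 6
    if defective_cores <= 4:
        return 4
    return 0  # assumption: anything worse than four bad cores is scrapped


# Hypothetical batch of ten dies: eight clean (the reported ~80%), two flawed.
batch = [0, 0, 0, 0, 0, 0, 0, 0, 1, 3]
bins = Counter(bin_die(d) for d in batch)
print(bins)
```

If demand for 6-core and 4-core parts is higher than the flawed dies can supply, the shortfall has to come out of the clean pile, which is why many of the cheaper chips are probably perfect underneath.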

For their 16-core workstation chips they simply wire two of these standard 8-core modules together. The 32-core server parts likewise are made up of four 8-core modules. And if you need serious horsepower, you can plug two 32-core CPUs into a server motherboard for 64 total cores supporting up to 2TB of RAM.

That standard 8-core module also has a bunch of other features, including SATA ports, USB ports, network controllers, and 32 PCIe lanes. A two-socket Epyc server, without needing any chipset support, includes 128 available PCIe lanes, 32 memory slots, 16 SATA ports, 16 USB 3.1 ports, and a couple of dozen network controllers.
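To see how the totals stack up, here is a small sketch that multiplies out the per-module resources. Only the 8 cores and 32 PCIe lanes per module come from the figures above; the per-module SATA and USB counts are my own back-calculation from the two-socket totals, and the halving of PCIe lanes in a two-socket box is an assumption that makes 8 x 32 come out to the quoted 128.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Module:
    """One 8-core building block. Only cores and PCIe lanes come from the post."""
    cores: int = 8
    pcie_lanes: int = 32
    sata_ports: int = 2  # assumption: 16 ports / 8 modules
    usb_ports: int = 2   # assumption: 16 ports / 8 modules


def system_totals(modules_per_cpu: int, sockets: int = 1, module: Module = Module()) -> dict:
    """Add up resources across every module in the system."""
    count = modules_per_cpu * sockets
    lanes = count * module.pcie_lanes
    if sockets == 2:
        # Assumption: half the lanes are spent linking the two sockets,
        # which reconciles 8 x 32 = 256 with the quoted 128.
        lanes //= 2
    return {
        "cores": count * module.cores,
        "pcie_lanes": lanes,
        "sata_ports": count * module.sata_ports,
        "usb_ports": count * module.usb_ports,
    }


workstation = system_totals(modules_per_cpu=2)        # 16-core workstation chip
server = system_totals(modules_per_cpu=4, sockets=2)  # two-socket Epyc server
print(workstation)
print(server)
```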

In the second half of this year AMD will be adding mobile parts with integrated graphics, a desktop chip also with integrated graphics, and a chip designed specifically for high-end networking equipment like routers and 5G wireless base stations.

Intel: Nine Is The New Seven

Intel has been in the lead with both chip design and manufacturing for the last few years, and seems to have been caught napping by the success of AMD's Ryzen and the announcement of ThreadRipper. They've fired back with their Core i9 professional platform, but it's rather a mess. The low-end chips require a high-end motherboard they can't fully use; the mid-range chips require a high-end motherboard they can't fully use; the high-end chips are nice, but very expensive; and the super-high-end chips seem to have suffered a sudden total existence failure* - the 12 to 18-core parts cannot be found anywhere, not even as detailed specifications. We have a price for each, and a core count, and that's it.

Apple: This One Goes Up To Eighteen

The first and last hours of Apple's interminable WWDC keynote were stultifying, with such landmark announcements as support for Amazon video (like everyone else) and a wireless speaker (like everyone else).

In between they finally refreshed the iMac to current hardware - Intel's current generation CPU, AMD's current generation graphics, the same Thunderbolt 3 that everyone else has had for eighteen months, and DDR4, which everyone else has had for even longer.

There are some welcome changes: the specs are definitely better, prices are lower, and the screens are even better than before (and the screens on the current range of iMacs are already amazing).

And then they announced the iMac Pro. Same 27" 5K screen as the regular model, but with an 8-core CPU on the low-end model. The high-end model has 18 cores, up to 128GB RAM, a 4TB SSD, and an AMD Vega GPU with 16GB of video RAM. Also, four Thunderbolt 3 ports, four USB 3.1 ports, and 10 Gbit ethernet.

It starts at $4999, which is awfully expensive for an iMac, but Apple claimed that it still works out cheaper than an equivalent workstation from anyone else. I configured a couple of systems from Dell and Lenovo, and I have to admit that Apple is right here. It costs no more, and possibly less, even though it includes a superb 5K monitor.

On the other hand, not one thing in the iMac Pro is user-upgradable. That's kind of a bummer.

nVidia: Bringing Skynet To Your Desktop, You Can Thank Us Later

nVidia have been a little quieter than their rivals at AMD, though more successful with their graphics parts so far - AMD's Vega is running several months late.

Their biggest announcement recently is their next generation Volta GPU, which delivers over 120 TFLOPs (sort of), and, at over 800 mm², is the biggest chip I have ever heard of.

That "sort of" is because the vast majority of the processing power comes in the form of low-precision math for AI programming, not anything that will be directly useful for graphics. And such a large chip - more than four times the size of AMD's Ryzen CPU parts - will be hellishly expensive to manufacture.
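Why does size hurt so much? A common back-of-envelope approach is the Poisson yield model, where the fraction of defect-free dies falls off exponentially with area. The sketch below is illustrative only: the 200 mm² Ryzen die area is a round number I picked to match "more than four times", and reusing AMD's reported 80% yield for nVidia's chip is an assumption, since the two are built on different processes at different fabs.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies with zero defects under the Poisson yield model."""
    return math.exp(-area_mm2 * defects_per_mm2)


def defect_density_from_yield(area_mm2: float, observed_yield: float) -> float:
    """Invert the model: the defect density implied by an observed yield."""
    return -math.log(observed_yield) / area_mm2


ryzen_area = 200.0  # mm^2, assumed round figure
volta_area = 800.0  # mm^2, "over 800" per the announcement

# Borrow the 80% defect-free figure reported for AMD's 8-core die.
density = defect_density_from_yield(ryzen_area, 0.80)
volta_yield = poisson_yield(volta_area, density)
print(f"Estimated defect-free yield at {volta_area:.0f} mm^2: {volta_yield:.0%}")
```

Under this model a die four times the area has the original yield raised to the fourth power, so every bit of extra silicon costs more than the last. And unlike AMD's 8-core module, a giant monolithic chip gets far fewer candidates per wafer to begin with.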

It's nonetheless an exciting development for anyone working in machine learning, and it certainly had a positive effect on nV's share price.

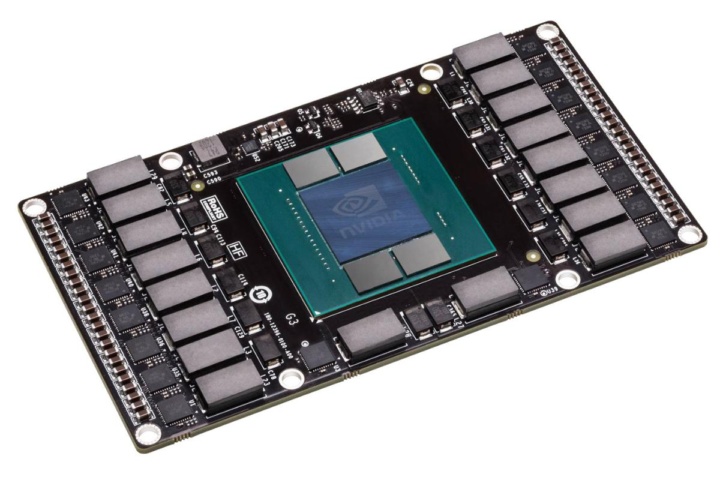

Speaking of graphics, now is not a good time to be trying to buy a new graphics card, because there aren't any. Particularly with AMD, but the shortage is starting to affect nVidia as well. A bubble in cryptocurrency prices, especially for a new currency called Ethereum, has triggered a virtual gold rush that has had miners buying every card they can get their hands on.

AMD cards are preferred for this for precisely the same reason nVidia are preferred by most gamers: AMD's design is more general-purpose, less specifically optimised for games. For Ethereum mining, AMD's cards are roughly twice as effective as an equivalent nVidia card.

Result: No ETA.

IBM: The Next Next Next Generation

One of the reasons AMD is having such a huge year is that they've spent most of the past five years stuck at the old 28nm process technology (called a "node"). The 20nm node that was supposed to replace it in 2014 wound up dead in a ditch* with only Intel managing to make it work (because they moved to FINFETs earlier than anyone else).

Last year the rest of the industry collectively got their new process nodes on line - called 14nm or 16nm depending on who you talk to, but all based on FINFETs and all far superior to the old 28nm node - and started cranking out chips. This means that AMD can make 8-core parts that are faster, smaller, and more power-efficient than anything they had before, and do it cheaper. They were years behind Intel and caught up in a single step.

IBM just announced the first test chips on a brand new 5nm node. To put that in perspective, they could put the CPU and GPU of the top-of-the-line model of the new iMac Pro on a single chip, add a gigabyte of cache, and run it at low enough power that you could use it in an Xbox.

IBM provided us with this die photo of their 5nm sample chip. Unfortunately it is invisible to the naked eye.*

They're planning to follow up with a 3nm process. This is pretty much the end of the road for regular silicon; we have to go to graphene or 3D lithography or quantum well transistors or some other exotic thing to move forward from there. But the amazing stuff we're getting right now is at 14nm, so 3nm is not shabby at all.
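For a feel of what those numbers mean, here is the idealised arithmetic: if every feature shrank in proportion to the node name, density would scale with the square of the ratio. That "if" is a big assumption, since node names are closer to marketing labels than measurements, so treat the results as an upper bound rather than a prediction. The function name is my own.

```python
def ideal_density_gain(old_nm: float, new_nm: float) -> float:
    """Idealised transistor density gain if features shrank with the node name."""
    return (old_nm / new_nm) ** 2


gain_5nm = ideal_density_gain(14, 5)
gain_3nm = ideal_density_gain(14, 3)
print(f"14nm to 5nm: up to {gain_5nm:.1f}x the transistors in the same area")
print(f"14nm to 3nm: up to {gain_3nm:.1f}x the transistors in the same area")
```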

ARM: We're Here Too!

ARM sells a trillion chips a year*, dwarfing the combined scale of Intel, AMD, and nVidia, but they're constrained by power and price and can't make huge splashy announcements of mega-chips like nVidia's Volta or AMD's Vega and Epyc.

Nonetheless, they've come up with new high-end and low-end designs in the A75 and A55 cores. The A75 replaces the A72 and A73 cores, which are alternative designs for a high-performance 64-bit core with different strengths and weaknesses; the A75 combines the best features of both to be faster and more power-efficient than either.

The A55 is a follow-up to the ubiquitous A53, which is found in just about every budget phone and tablet and many not-so-budget ones. The A53 is a versatile low-power part with decent performance; the A55 is designed to improve performance and power efficiency at the same time. It's not an exciting CPU, but ARM's manufacturing partners will ship them in astronomical volume.

The other thing to note about these new CPUs is that again, eight is the new four. Most phone CPUs currently have cores grouped into fours - commonly four fast cores and four power-saving cores - because that's as many as you could group together. The A75 and A55 allow you to have up to eight cores in a group. Which changes the perspective a little, because eight A75 cores is getting into typical desktop performance territory.

* Possibly not literally true.

Posted by: Pixy Misa at 07:25 PM

Powered by Minx 1.1.6c-pink.
